May 21, 2025

Overview of Google I/O benchmarking results: Google firing on all cylinders with launches across LLMs, image, video, music and more

Updated 8 June 2025 to reflect Gemini 2.5 Pro update the week following I/O

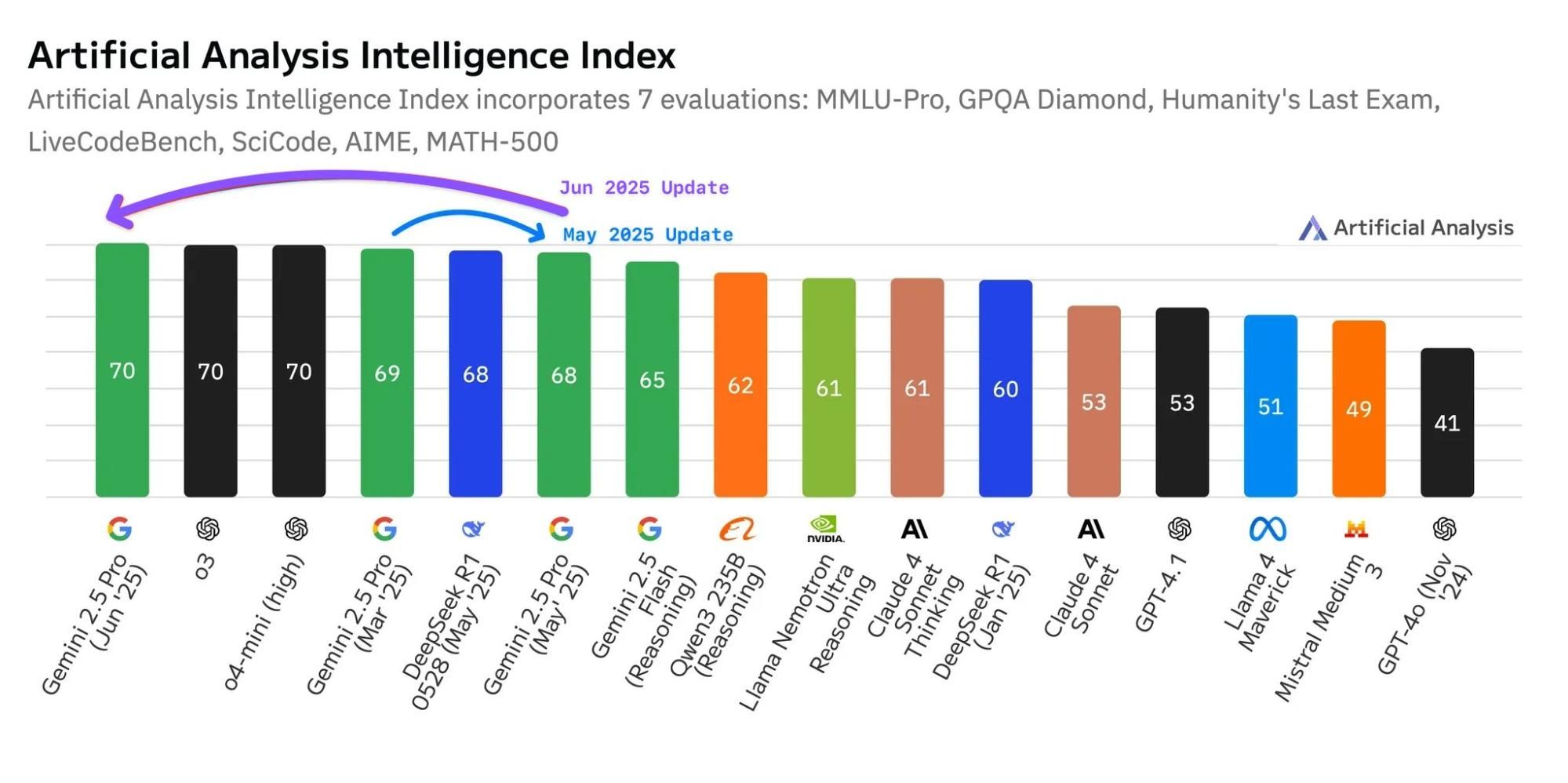

Language models: Google's updated Gemini 2.5 Pro now leads the AI intelligence frontier, matching OpenAI's o3 in our independent benchmarks

Google's May update of Gemini 2.5 Pro regressed on some evaluations compared to the initial March release. The June update not only fixes those regressions but also delivers significant improvements across our independent benchmarks.

Key highlights:

- #1 position across evals: Gemini 2.5 Pro (June) now leads across a range of evals, including MMLU-Pro (86%), GPQA Diamond (84%), and Humanity's Last Exam (21%)

- Leading coding performance: 80% on LiveCodeBench, matching o4-mini (high)

- Variable reasoning budget: Users can set the maximum number of output tokens that can be used for 'thinking', allocating more tokens to harder tasks as needed

Gemini 2.5 Pro (June) leads across a range of evals

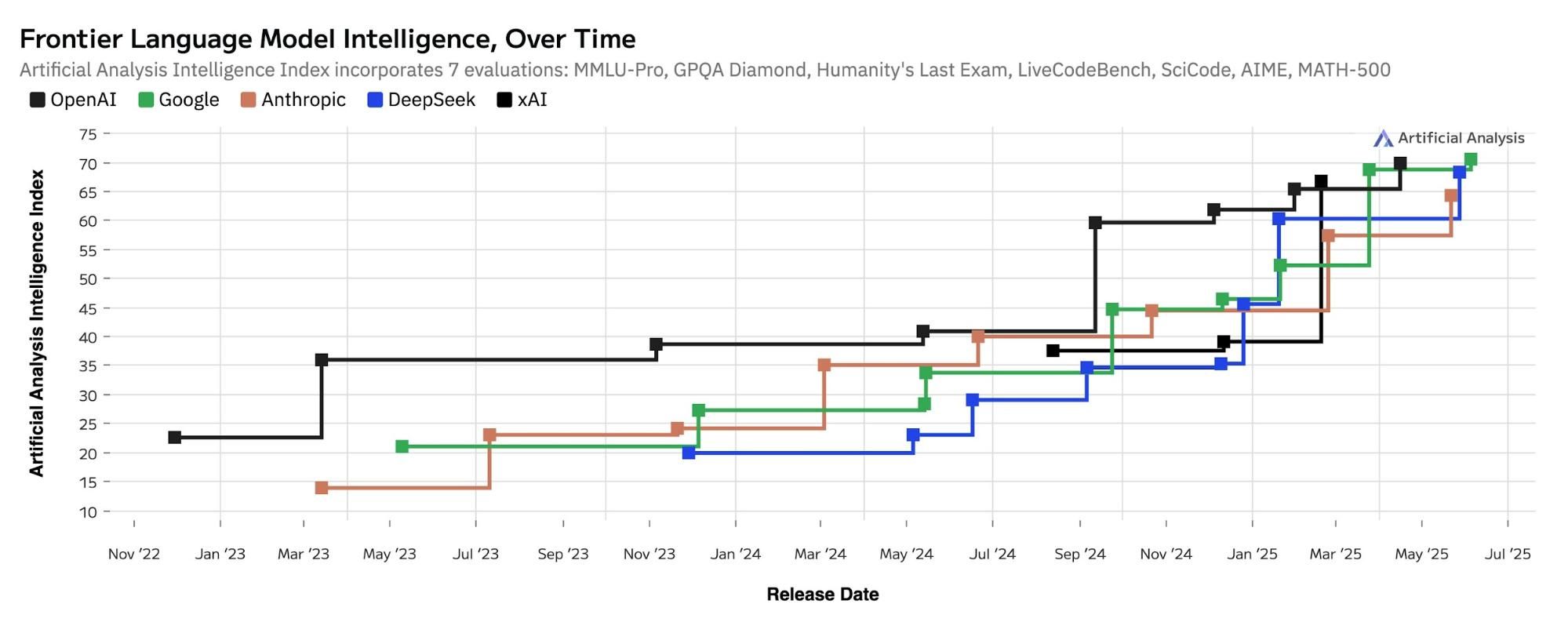

Google draws alongside OpenAI in the language model frontier

Google also released an updated version of Gemini 2.5 Flash today at I/O. In reasoning mode, the model's intelligence rises above Qwen3 235B-A22B, and in non-reasoning mode it is now equivalent to GPT-4.1 and DeepSeek V3.

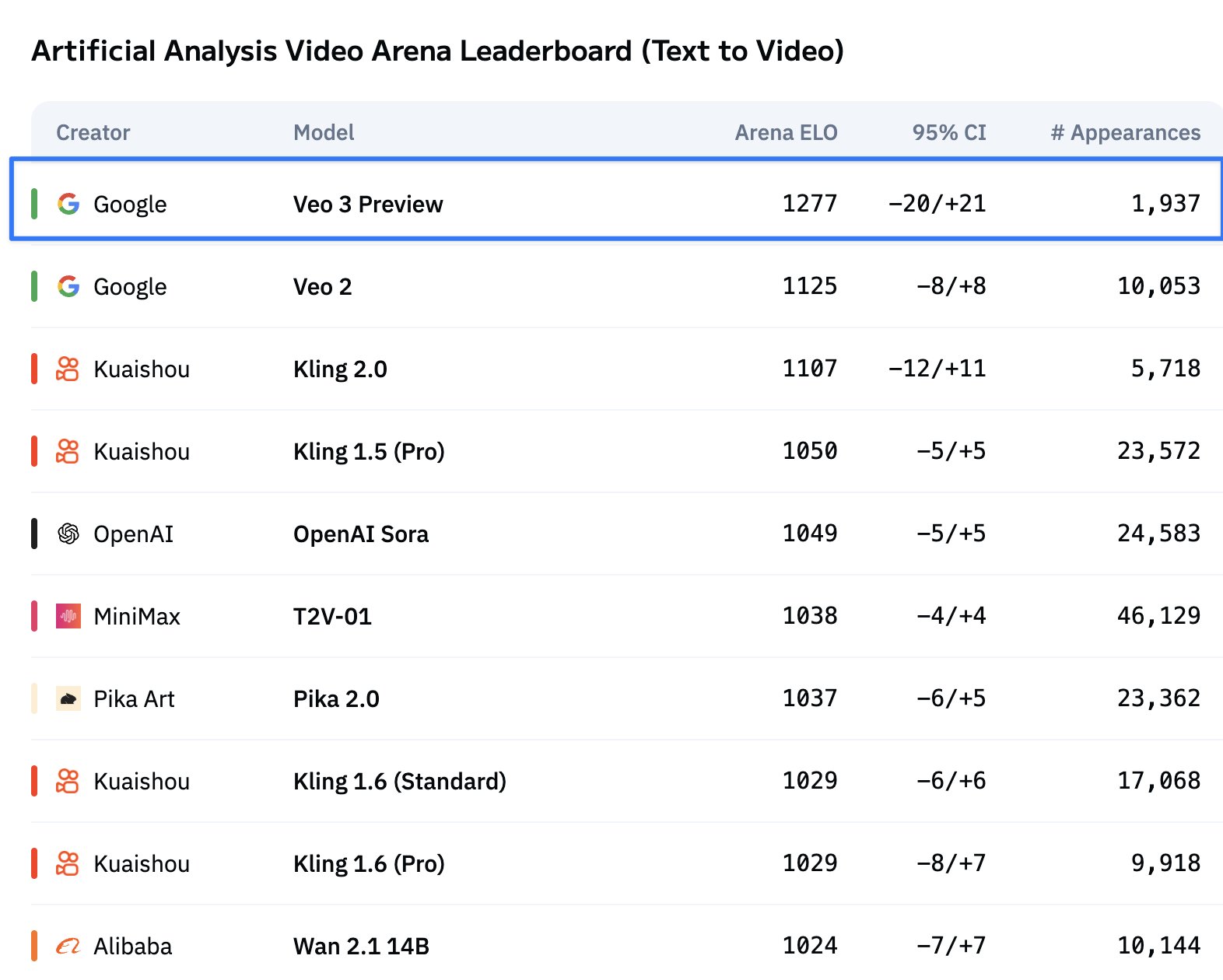

Video models: Veo 3 debuts on the Artificial Analysis Video Arena Leaderboard in first place, with a significant lead over Google's own Veo 2

After a day of voting, we can confidently say that Veo 3 Preview is substantially better than Veo 2, putting Google well ahead of both Kuaishou's Kling 2.0 and OpenAI's Sora on our Text to Video leaderboard.

We have begun testing Veo 3's image to video capabilities and will be releasing those results in the next few days!

Early results show Veo 3 topping the Text to Video leaderboard

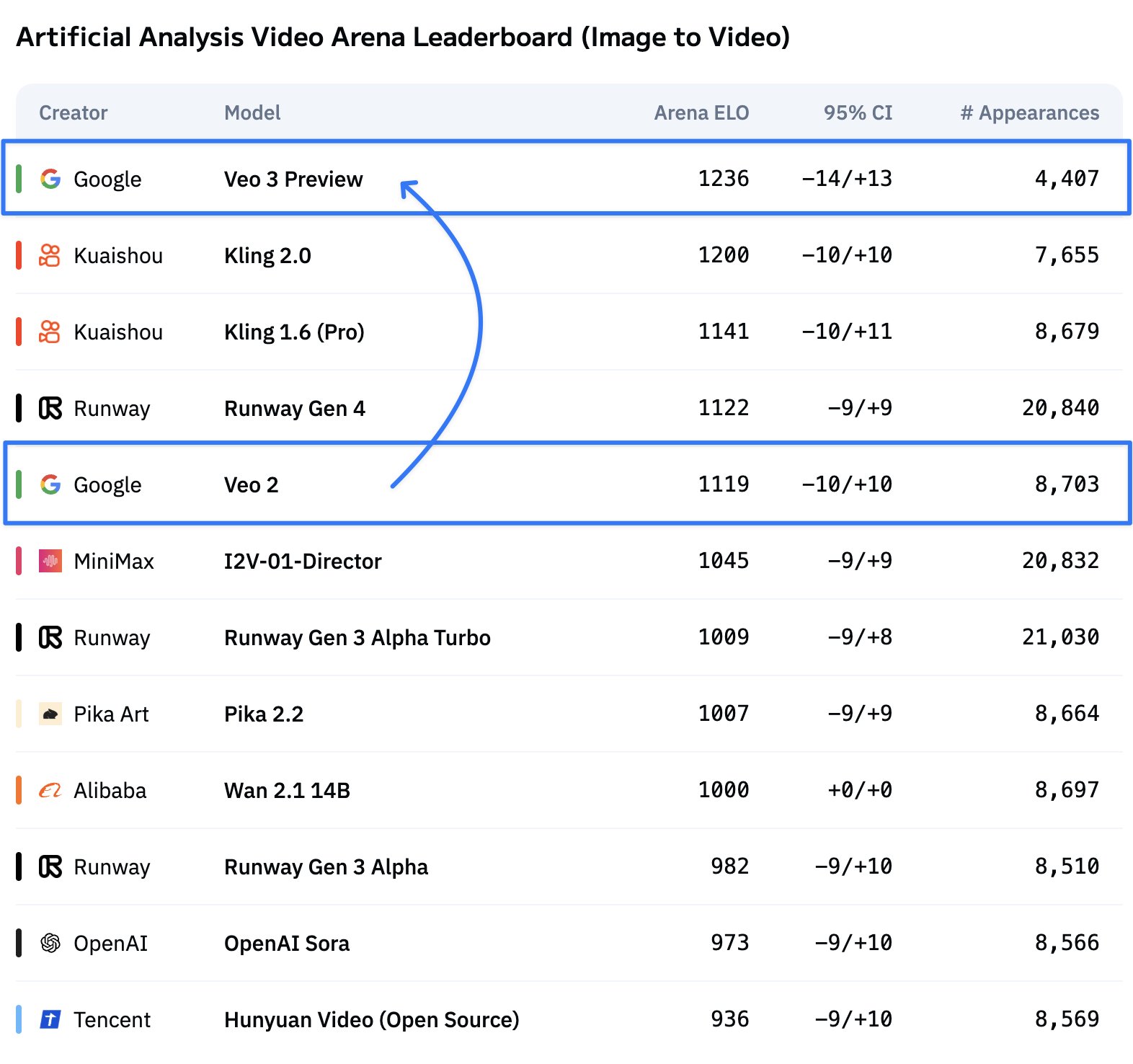

Veo 3 is also now the first model to top both the Image to Video and Text to Video leaderboards, outperforming Kling 2.0 and Runway Gen 4 to secure the #1 spot across both modalities!

Veo 3 also tops the Image to Video leaderboard

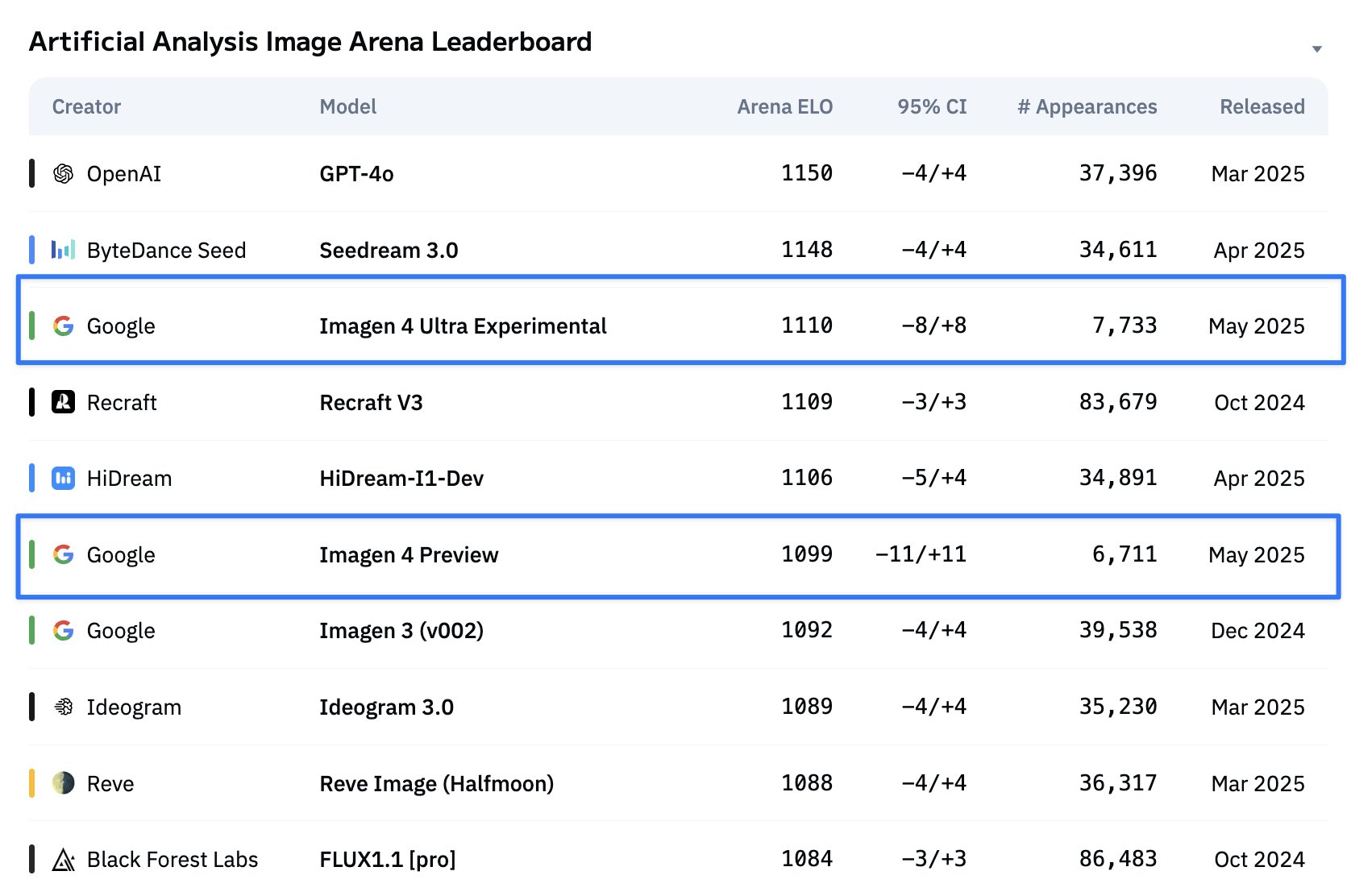

Image models: Google's new Imagen 4 Ultra comes 3rd in the Artificial Analysis Image Arena, falling just short of OpenAI's GPT-4o and ByteDance's Seedream 3.0

After a few days in the Artificial Analysis Image Arena, Imagen 4 Ultra appears to be a noticeable but small upgrade over Imagen 3, while Imagen 4 Base appears to be similar in quality to Imagen 3.

Both Imagen 4 Preview and Ultra Experimental are available to developers on Vertex AI Studio, with no pricing publicly listed. Third-party provider @fal also hosts Imagen 4 Preview at $0.05/image (the same price it charges for Imagen 3). For comparison, GPT-4o costs ~$0.17/image, while Recraft V3 costs ~$0.04/image.
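To put the per-image prices quoted above side by side, here is a minimal sketch that scales them to a batch and compares them as ratios. It assumes flat per-image pricing with no resolution or quality tiers, treats the "~" figures as exact, and uses a hypothetical batch size of 1,000 images; the function and dictionary names are ours for illustration only.

```python
# Per-image prices in USD as quoted in the text; the GPT-4o and Recraft V3
# figures are approximate ("~") in the source and treated as exact here.
PRICE_PER_IMAGE_USD = {
    "Imagen 4 Preview (fal)": 0.05,
    "Imagen 3 (fal)": 0.05,
    "GPT-4o": 0.17,
    "Recraft V3": 0.04,
}


def batch_cost(price_per_image: float, n_images: int) -> float:
    """Total cost in USD for n_images, assuming a flat per-image price."""
    return round(price_per_image * n_images, 2)


def relative_cost(model: str, baseline: str) -> float:
    """Per-image price of `model` as a multiple of `baseline`'s price."""
    return PRICE_PER_IMAGE_USD[model] / PRICE_PER_IMAGE_USD[baseline]


if __name__ == "__main__":
    n = 1_000  # hypothetical batch size, not a figure from the article
    for name, price in PRICE_PER_IMAGE_USD.items():
        print(f"{name}: ${batch_cost(price, n):,.2f} per {n:,} images")
    ratio = relative_cost("GPT-4o", "Imagen 4 Preview (fal)")
    print(f"GPT-4o vs Imagen 4 Preview (fal): {ratio:.1f}x")
```

On these figures, GPT-4o works out to roughly 3.4 times the per-image price of Imagen 4 Preview on fal, while Recraft V3 is slightly cheaper than both Imagen models.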

Imagen 4 is also available via:

- A new demo website called Whisk

- The Gemini consumer app, although we note that the app does not make clear which model generated a given image

Imagen 4 Ultra Experimental and Preview both slightly outperform predecessor Imagen 3

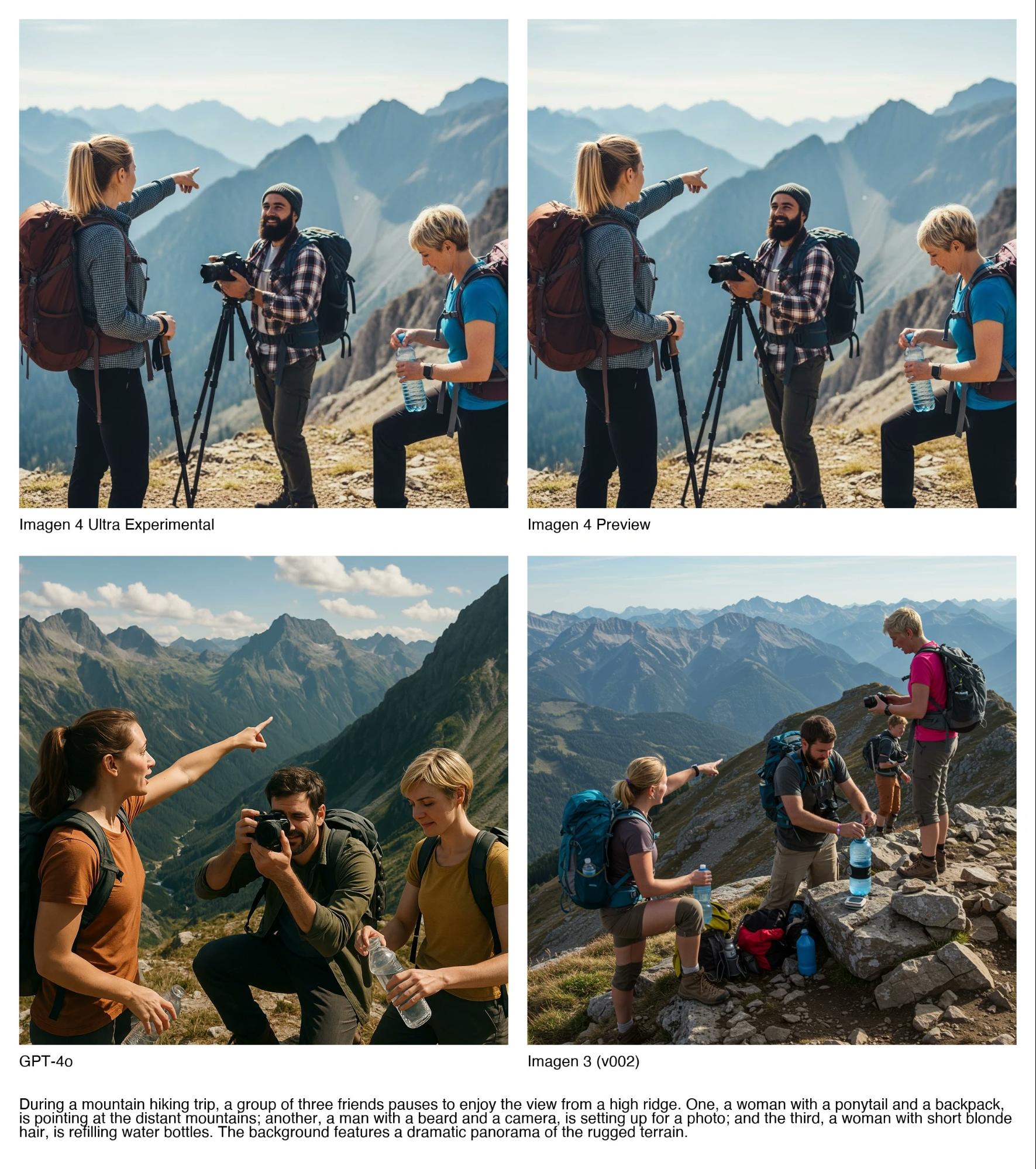

Example generations across Imagen models
