February 11, 2026

GLM-5: Everything You Need to Know

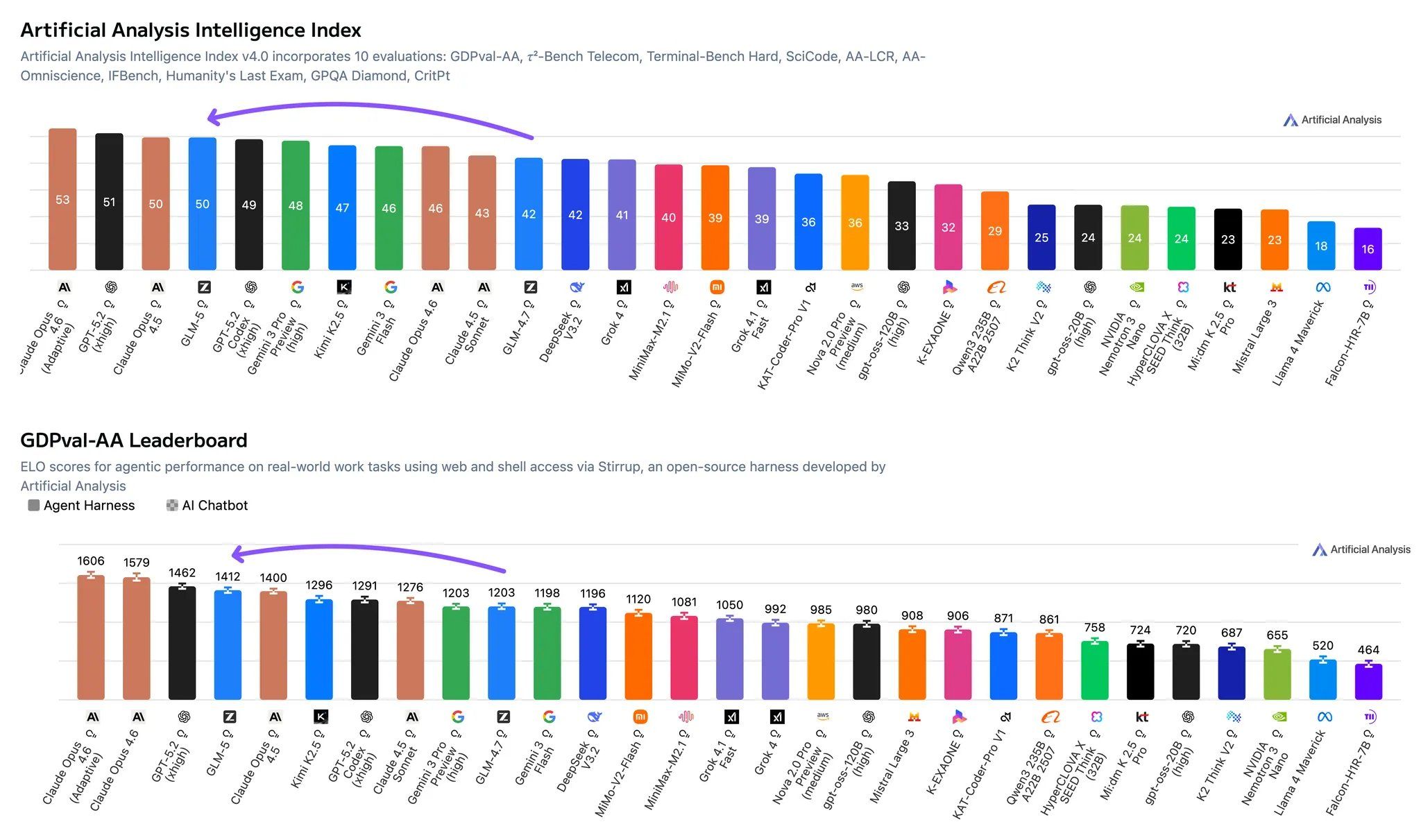

GLM-5 is the new leading open weights model! GLM-5 leads the Artificial Analysis Intelligence Index amongst open weights models and makes large gains over GLM-4.7 in GDPval-AA, our agentic benchmark focused on economically valuable work tasks.

GLM-5 is Z.AI's first new architecture since GLM-4.5: the GLM-4.5, 4.6 and 4.7 models were all 355B total / 32B active parameter mixture-of-experts models. GLM-5 scales to 744B total / 40B active parameters and integrates DeepSeek Sparse Attention. This puts GLM-5 more in line with the parameter counts of the DeepSeek V3 family (671B total / 37B active) and Moonshot's Kimi K2 family (1T total / 32B active). However, GLM-5 is released in BF16 precision, coming in at ~1.5TB in total size. That is larger than DeepSeek V3 and recent Kimi K2 models, which were released natively in FP8 and INT4 precision respectively.
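The size comparison comes down to parameters multiplied by bytes per parameter. The sketch below is a back-of-the-envelope estimate, assuming the rounded parameter counts quoted above and 2 / 1 / 0.5 bytes per parameter for BF16 / FP8 / INT4; it ignores quantization scales, any layers kept at higher precision and checkpoint file overhead, so real downloads will differ somewhat. It also computes the share of parameters active per token.

```python
# Rough weight-storage estimate: parameters x bytes per parameter.
# Assumptions: rounded parameter counts as quoted in the text, and
# 2 / 1 / 0.5 bytes per parameter for BF16 / FP8 / INT4. Quantization
# scales, layers kept at higher precision and file overhead are ignored.
BYTES_PER_PARAM = {"BF16": 2.0, "FP8": 1.0, "INT4": 0.5}

# name: (total params in billions, active params in billions, release precision)
MODELS = {
    "GLM-5": (744, 40, "BF16"),
    "DeepSeek V3": (671, 37, "FP8"),
    "Kimi K2": (1000, 32, "INT4"),
}


def weight_size_gb(total_params_b: float, precision: str) -> float:
    """Approximate weight storage in decimal GB (billions of params x bytes/param)."""
    return total_params_b * BYTES_PER_PARAM[precision]


def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Share of parameters used per token in a mixture-of-experts model."""
    return active_params_b / total_params_b


if __name__ == "__main__":
    for name, (total, active, precision) in MODELS.items():
        size = weight_size_gb(total, precision)
        share = active_fraction(total, active)
        print(f"{name:<12} {precision:<5} ~{size:,.0f} GB, {share:.1%} active per token")
```

Under these assumptions GLM-5's BF16 weights (~1,488 GB) are roughly 2.2x the size of DeepSeek V3's FP8 weights and roughly 3x those of an INT4 Kimi K2, even though Kimi K2 has more total parameters.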

Key takeaways:

➤ GLM-5 scores 50 on the Intelligence Index and is the new open weights leader, up from GLM-4.7's score of 42. The 8-point jump is driven by improvements in agentic performance and in knowledge/hallucination. This is the first time an open weights model has scored 50 or above on the Artificial Analysis Intelligence Index v4.0, a significant narrowing of the gap between proprietary and open weights models. GLM-5 ranks above other frontier open weights models such as Kimi K2.5, MiniMax 2.1 and DeepSeek V3.2.

➤ GLM-5 achieves the highest Artificial Analysis Agentic Index score among open weights models, with a score of 63, ranking third overall. This is driven by strong performance in GDPval-AA, our primary metric for general agentic performance on knowledge work tasks ranging from preparing presentations and data analysis to video editing. GLM-5 has a GDPval-AA Elo of 1412, behind only Claude Opus 4.6 and GPT-5.2 (xhigh). GLM-5 represents a significant uplift in open weights models' performance on real-world, economically valuable work tasks.

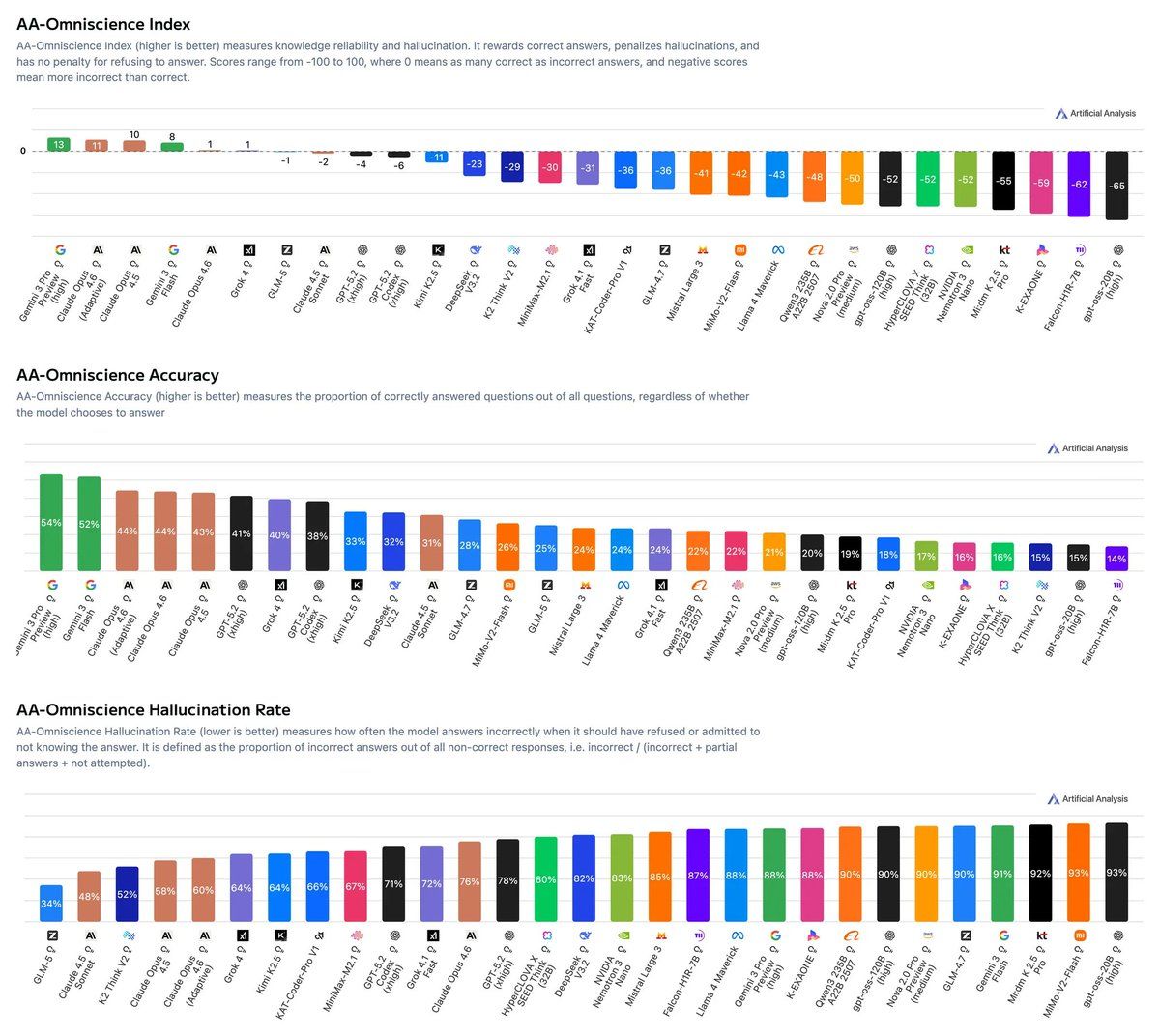
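An Elo gap is easier to interpret as an expected head-to-head result. The sketch below uses the standard Elo expected-score formula; that GDPval-AA ratings follow the conventional 400-point scale is an assumption, and the rival ratings are hypothetical offsets from GLM-5's 1412, not the scores of Claude Opus 4.6 or GPT-5.2.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo model.

    Assumes the conventional 400-point logistic scale; 1.0 = always wins,
    0.5 = evenly matched.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    glm5 = 1412  # GDPval-AA Elo reported above
    for gap in (0, 50, 100, 200):
        rival = glm5 + gap  # hypothetical higher-rated model, not a reported score
        print(f"vs +{gap:>3} Elo: GLM-5 expected score {expected_score(glm5, rival):.2f}")
```

On this scale a 100-point deficit corresponds to an expected score of about 0.36 in pairwise comparisons, so modest rating gaps still leave the lower-rated model winning a substantial share of tasks.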

➤ GLM-5 shows a large improvement on the AA-Omniscience Index, driven by reduced hallucination. GLM-5 scores -1 on the AA-Omniscience Index, a 35-point improvement over GLM-4.7 (Reasoning, -36). This is driven by a 56 percentage point reduction in the hallucination rate compared to GLM-4.7 (Reasoning). GLM-5 achieves this by abstaining more frequently, and it has the lowest hallucination rate among the models tested.

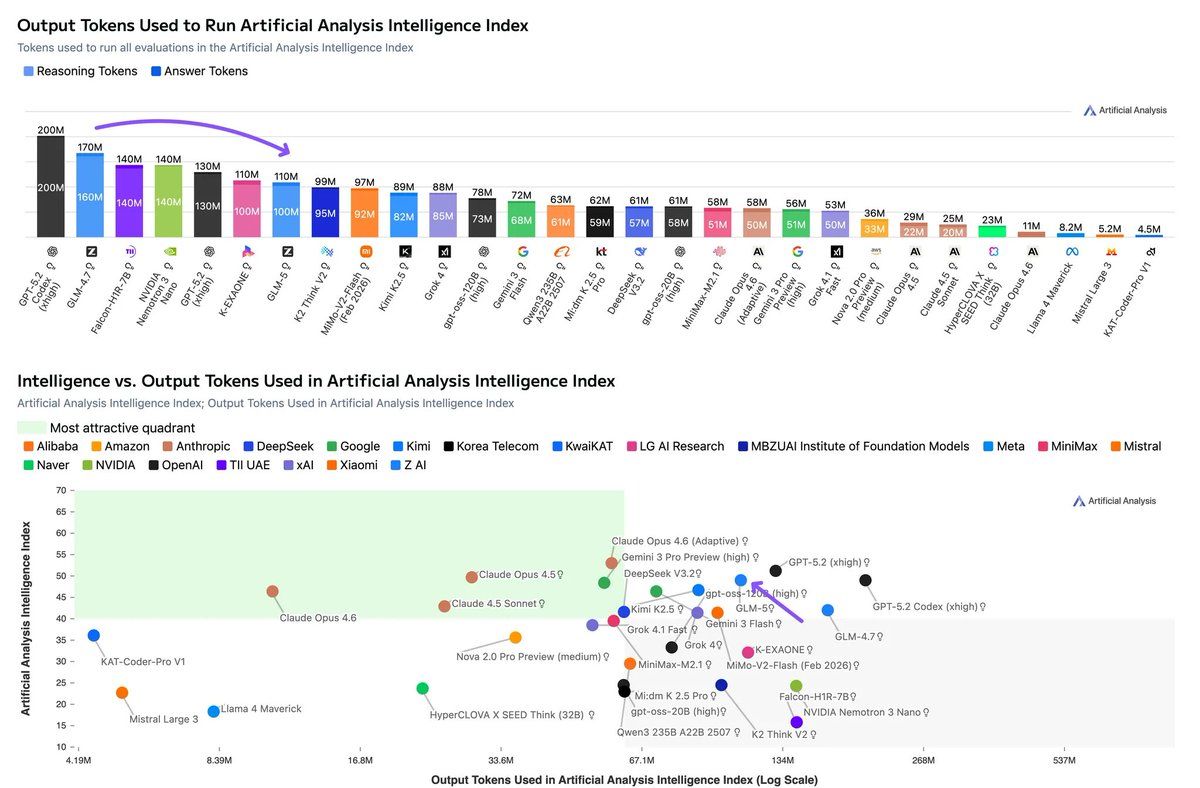
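Why abstaining helps is easiest to see with numbers. The sketch below assumes the AA-Omniscience Index awards +1 per correct answer, -1 per incorrect answer and 0 per abstention, scaled to -100..100, and that the hallucination rate is the share of not-correctly-answered questions on which the model answered wrongly instead of abstaining; treat both as assumptions and defer to Artificial Analysis's methodology for the exact definitions. The question counts are hypothetical, not GLM-5's results.

```python
def omniscience_index(correct: int, incorrect: int, abstained: int) -> float:
    """Assumed definition: +1 per correct answer, -1 per incorrect answer,
    0 per abstention, scaled to a -100..100 range."""
    total = correct + incorrect + abstained
    return 100 * (correct - incorrect) / total


def hallucination_rate(incorrect: int, abstained: int) -> float:
    """Assumed definition: of the questions not answered correctly, the share
    answered wrongly rather than abstained on."""
    return incorrect / (incorrect + abstained)


if __name__ == "__main__":
    # Hypothetical 1,000-question runs with identical accuracy (300 correct);
    # only the split between wrong answers and abstentions changes.
    runs = {
        "more guessing": (300, 500, 200),
        "more abstaining": (300, 350, 350),
    }
    for label, (correct, incorrect, abstained) in runs.items():
        index = omniscience_index(correct, incorrect, abstained)
        rate = hallucination_rate(incorrect, abstained)
        print(f"{label:<16} index {index:+.0f}, hallucination rate {rate:.0%}")
```

With accuracy held fixed, turning 150 wrong answers into abstentions moves the index from -20 to -5 and the hallucination rate from about 71% to 50%, which is the mechanism the takeaway describes.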

➤ GLM-5 used ~110M output tokens to run the Intelligence Index, compared to GLM-4.7's ~170M, a significant decrease despite higher scores across most evaluations. This moves GLM-5 closer to the frontier of the Intelligence vs. Output Tokens chart, though it remains less token-efficient than Claude Opus 4.6.
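The savings can be checked directly from the approximate token counts. The tokens-per-point ratio in this sketch is an illustrative measure introduced here, not a metric Artificial Analysis reports, and the inputs are the rounded figures quoted above.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return 100 * (new - old) / old


def tokens_per_point(output_tokens_m: float, index_score: float) -> float:
    """Illustrative ratio (not an Artificial Analysis metric): millions of
    output tokens spent per Intelligence Index point."""
    return output_tokens_m / index_score


if __name__ == "__main__":
    # Approximate figures from the text: (output tokens in millions, index score)
    glm47 = (170, 42)
    glm5 = (110, 50)
    print(f"Output tokens: {percent_change(glm47[0], glm5[0]):.0f}%")
    print(f"GLM-4.7: {tokens_per_point(*glm47):.2f}M tokens per point")
    print(f"GLM-5:   {tokens_per_point(*glm5):.2f}M tokens per point")
```

By this rough measure GLM-5 uses about 35% fewer output tokens overall and a little over half as many tokens per index point as GLM-4.7.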

Key model details:

➤ Context window: 200K tokens, the same as GLM-4.7

➤ Multimodality: Text input and output only; Kimi K2.5 remains the leading open weights model to support image input

➤ Size: 744B total parameters, 40B active parameters. For self-deployment, GLM-5 will require ~1,490GB of memory to store the weights in native BF16 precision.

➤ Licensing: MIT License

➤ Availability: At the time of sharing this analysis, GLM-5 is available on Z.AI's first-party API and on several third-party APIs, such as Novita ($1/$3.2 per 1M input/output tokens), GMI Cloud ($1/$3.2) and DeepInfra ($0.8/$2.56), in FP8 precision.

➤ Training tokens: Z.AI also indicated that it increased the pre-training data volume from 23T to 28.5T tokens.
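The ~1,490GB weight figure translates into hardware roughly as follows. This minimal sketch divides weight size by per-device memory and rounds up; the 80 GB device size is an assumption for illustration, FP8 is included because that is the precision the listed APIs serve, and KV cache (which grows with the 200K context), activations and runtime overhead are ignored, so the result is a strict lower bound.

```python
import math

GLM5_TOTAL_PARAMS = 744e9  # total parameters, from the model details above
BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}


def weights_gb(precision: str) -> float:
    """GLM-5 weight storage in decimal GB at the given precision."""
    return GLM5_TOTAL_PARAMS * BYTES_PER_PARAM[precision] / 1e9


def min_devices_for_weights(precision: str, device_mem_gb: float = 80.0) -> int:
    """Lower bound on devices needed to hold the weights alone.

    Ignores KV cache, activations and runtime overhead, which all need extra room.
    """
    return math.ceil(weights_gb(precision) / device_mem_gb)


if __name__ == "__main__":
    # 80 GB per device is an illustrative assumption, not a recommendation.
    for precision in ("BF16", "FP8"):
        print(
            f"{precision}: ~{weights_gb(precision):,.0f} GB of weights, "
            f"at least {min_devices_for_weights(precision)} devices with 80 GB each"
        )
```

Under these assumptions even the FP8 weights (~744 GB) exceed the 640 GB of a hypothetical node with eight 80 GB devices, so self-deployment means spreading the weights across more devices or using larger-memory hardware.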

Chart: Artificial Analysis Intelligence Index

GLM-5 improves substantially on the AA-Omniscience Index, driven by lower hallucination: the model more often abstains from answering questions it does not know.

Chart: AA-Omniscience Index

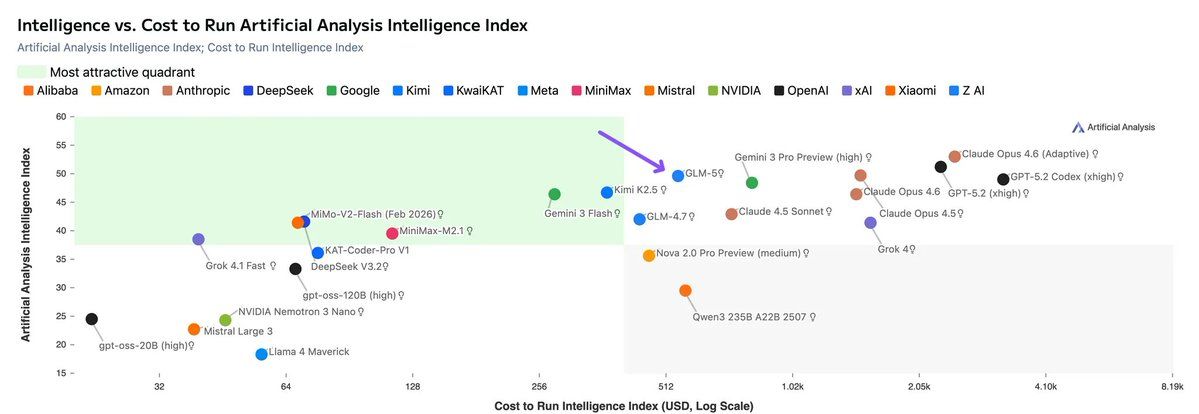

GLM-5 sits on the Pareto frontier of the Intelligence vs. Cost to Run the Intelligence Index chart, driven by lower per-token pricing than proprietary peers (e.g. Claude Opus, Google Gemini and OpenAI GPT-5.2). GLM-5 cost ~$547 to run the Intelligence Index, based on the median per-token price across third-party providers.

Chart: Intelligence vs. Cost to Run the Intelligence Index
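A rough split of the ~$547 between output and input tokens can be estimated from figures in this article. The sketch assumes the median is taken over only the three providers named in the availability details (the article's median may cover more providers) and that all ~110M output tokens are billed at that median output price; the input-token count is not reported, so the remainder is left as an unattributed residual.

```python
from statistics import median

# Per-1M-token prices (USD) for the three providers named in this article.
# The article's median may be taken over more providers than these.
INPUT_PRICES = [1.00, 1.00, 0.80]
OUTPUT_PRICES = [3.20, 3.20, 2.56]

OUTPUT_TOKENS_M = 110  # ~110M output tokens used to run the Intelligence Index
REPORTED_COST_USD = 547  # ~$547 reported cost to run the Intelligence Index


def token_cost_usd(tokens_m: float, price_per_m: float) -> float:
    """Cost in USD of `tokens_m` million tokens at `price_per_m` USD per 1M tokens."""
    return tokens_m * price_per_m


if __name__ == "__main__":
    in_price = median(INPUT_PRICES)
    out_price = median(OUTPUT_PRICES)
    out_cost = token_cost_usd(OUTPUT_TOKENS_M, out_price)
    print(f"Median price: ${in_price:.2f} input / ${out_price:.2f} output per 1M tokens")
    print(f"Output tokens: ~${out_cost:.0f} of the ~${REPORTED_COST_USD} total")
    # The input-token count is not reported, so the rest is left unattributed.
    print(f"Remainder (presumably input tokens): ~${REPORTED_COST_USD - out_cost:.0f}")
```

Under these assumptions output tokens account for just under two-thirds of the bill (~$352), so the drop from ~170M to ~110M output tokens matters for cost as well as for token efficiency.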

GLM-5 uses fewer output tokens than GLM-4.7 to run the Artificial Analysis Intelligence Index.

Chart: Output Tokens Used to Run the Intelligence Index

Check out additional analysis for this model on X: https://x.com/ArtificialAnlys/status/2021678229418066004

Explore the full suite of benchmarks at https://artificialanalysis.ai/