October 27, 2025

MiniMax M2 Benchmarks and Analysis

MiniMax's M2 achieves a new all-time-high Intelligence Index score for an open weights model and offers impressive efficiency with only 10B active parameters (200B total)

Key takeaways:

- Efficiency to serve at scale: MiniMax-M2 has 200B total parameters and is very sparse, with only 10B active parameters per forward pass. So few active parameters allow the model to be served efficiently at scale (for comparison, DeepSeek V3.2 has 671B total and 37B active, and Qwen3 235B has 235B total and 22B active). The model can also easily fit on 4xH100s at FP8 precision.

- Strengths focus on agentic use-cases: The model's strengths include tool use and instruction following (as shown by Tau2 Bench and IFBench). As such, while M2 likely excels at agentic use cases, it may underperform other open weights leaders such as DeepSeek V3.2 and Qwen3 235B at some generalist tasks. This is in line with a number of recent open weights model releases from Chinese AI labs that focus on agentic capabilities, likely pointing to a heavy post-training emphasis on RL. Like most other leading open weights models, M2 is a text-only model; Alibaba's recent Qwen3 VL releases remain the leading open weights multimodal models.

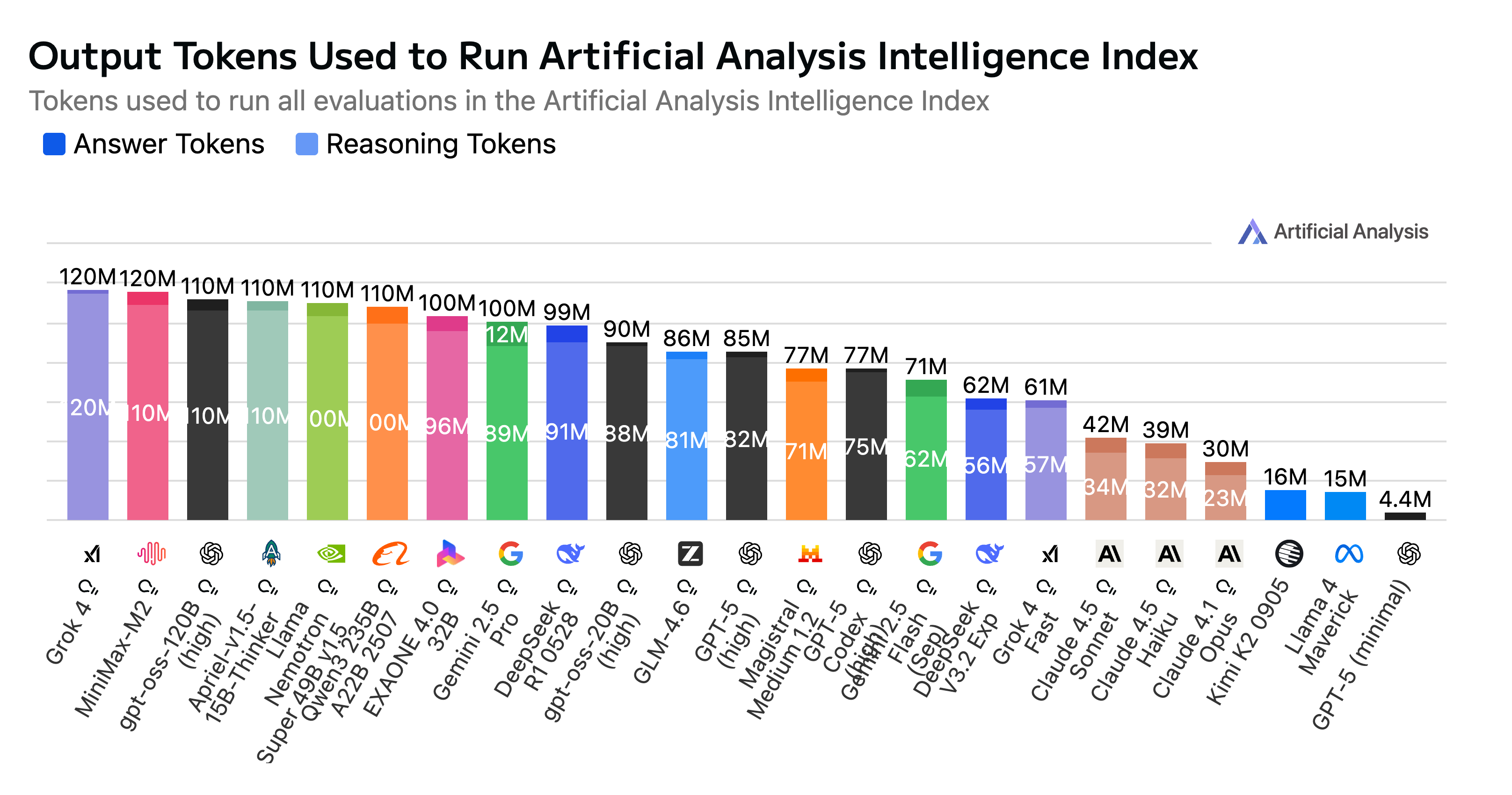

- Cost & token usage: MiniMax's API is offering the model at a very competitive per-token price of $0.3/$1.2 per 1M input/output tokens. However, the model is very verbose, using 120M tokens to complete our Intelligence Index evaluations, the equal-highest usage alongside Grok 4. As such, its low per-token price is partly offset by high token usage.

- Continued leadership in open source by Chinese AI labs: MiniMax's release continues the leadership of Chinese AI labs in open source that DeepSeek kicked off in late 2024, and which has been sustained by further DeepSeek releases and by Alibaba, Z AI and Moonshot AI.
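The sparsity and 4xH100 claims in the first takeaway can be checked with back-of-the-envelope arithmetic. The sketch below is illustrative only: the parameter counts come from the text above, while the 80 GB per-GPU figure, the 1-byte-per-parameter FP8 estimate, and all function names are assumptions made for this example. It counts weights only and ignores KV cache, activations and runtime overhead.

```python
# Rough serving arithmetic. Parameter counts (in billions) are taken from the
# takeaways above; everything else is an assumption for illustration.
H100_MEMORY_GB = 80        # assumption: the 80 GB H100 variant
BYTES_PER_PARAM_FP8 = 1    # FP8 stores one byte per weight

# name -> (total parameters in billions, active parameters in billions)
MODELS = {
    "MiniMax-M2": (200, 10),
    "DeepSeek V3.2": (671, 37),
    "Qwen3 235B": (235, 22),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used per forward pass."""
    return active_b / total_b


def fp8_weight_gb(total_b: float) -> float:
    """Approximate weight memory in GB: 1e9 params * 1 byte = 1 GB."""
    return total_b * BYTES_PER_PARAM_FP8


def headroom_gb(total_b: float, num_gpus: int) -> float:
    """Memory left after loading weights (negative means it does not fit)."""
    return num_gpus * H100_MEMORY_GB - fp8_weight_gb(total_b)


if __name__ == "__main__":
    for name, (total_b, active_b) in MODELS.items():
        print(
            f"{name}: {active_fraction(total_b, active_b):.1%} active, "
            f"{fp8_weight_gb(total_b):.0f} GB FP8 weights, "
            f"{headroom_gb(total_b, 4):+.0f} GB headroom on 4 GPUs"
        )
```

Under these assumptions, M2's weights take roughly 200 GB of the 320 GB available on four 80 GB GPUs, leaving about 120 GB for KV cache and activations, which is consistent with the "easily fit" claim.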

See below for further analysis and a link to the model on Artificial Analysis.

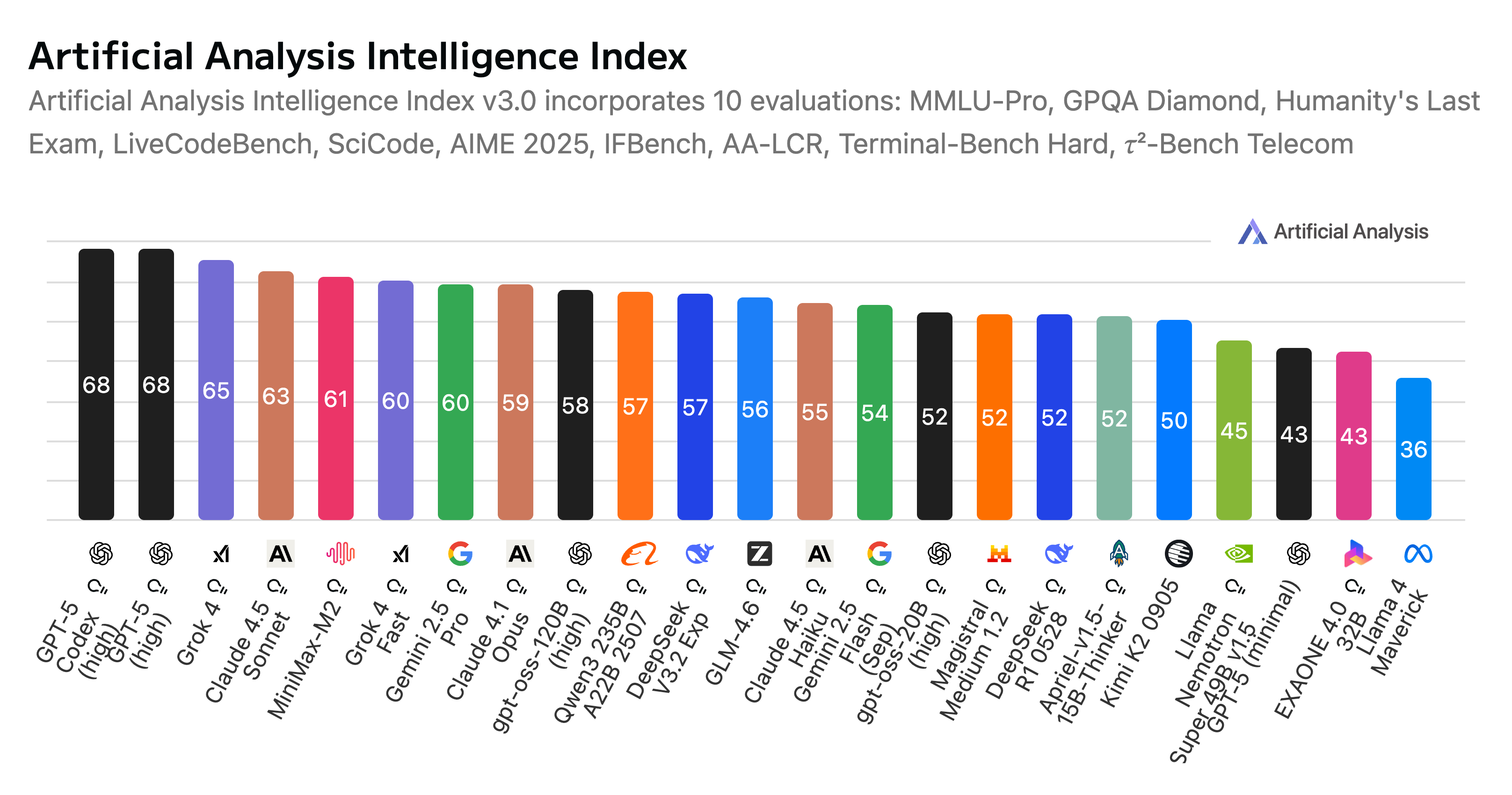

Artificial Analysis Intelligence Index

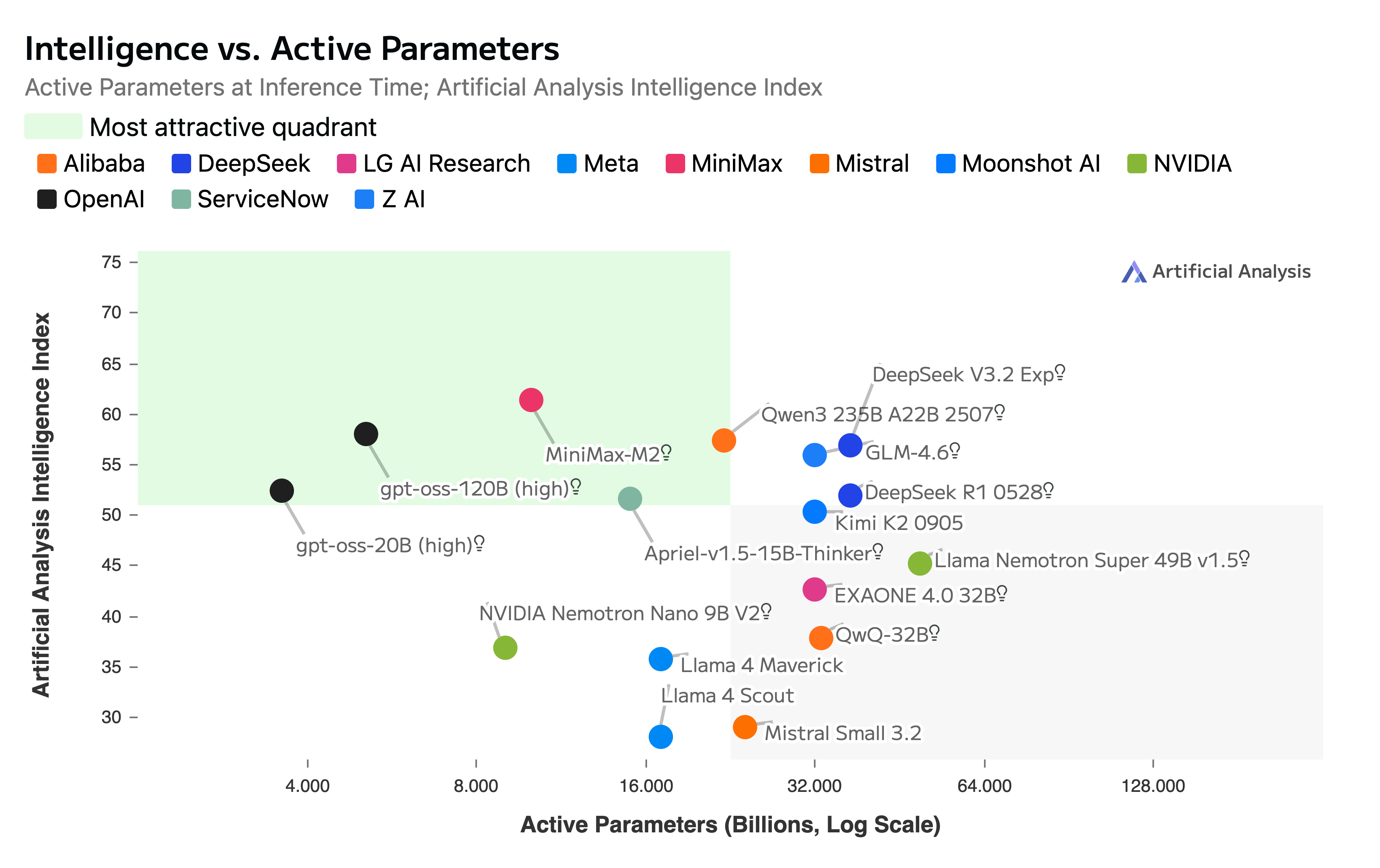

For its intelligence, MiniMax-M2 is relatively small (200B total parameters) and has very few active parameters (10B), which gives it a leading position on intelligence vs. active parameters among larger models.

Intelligence vs Active Parameters

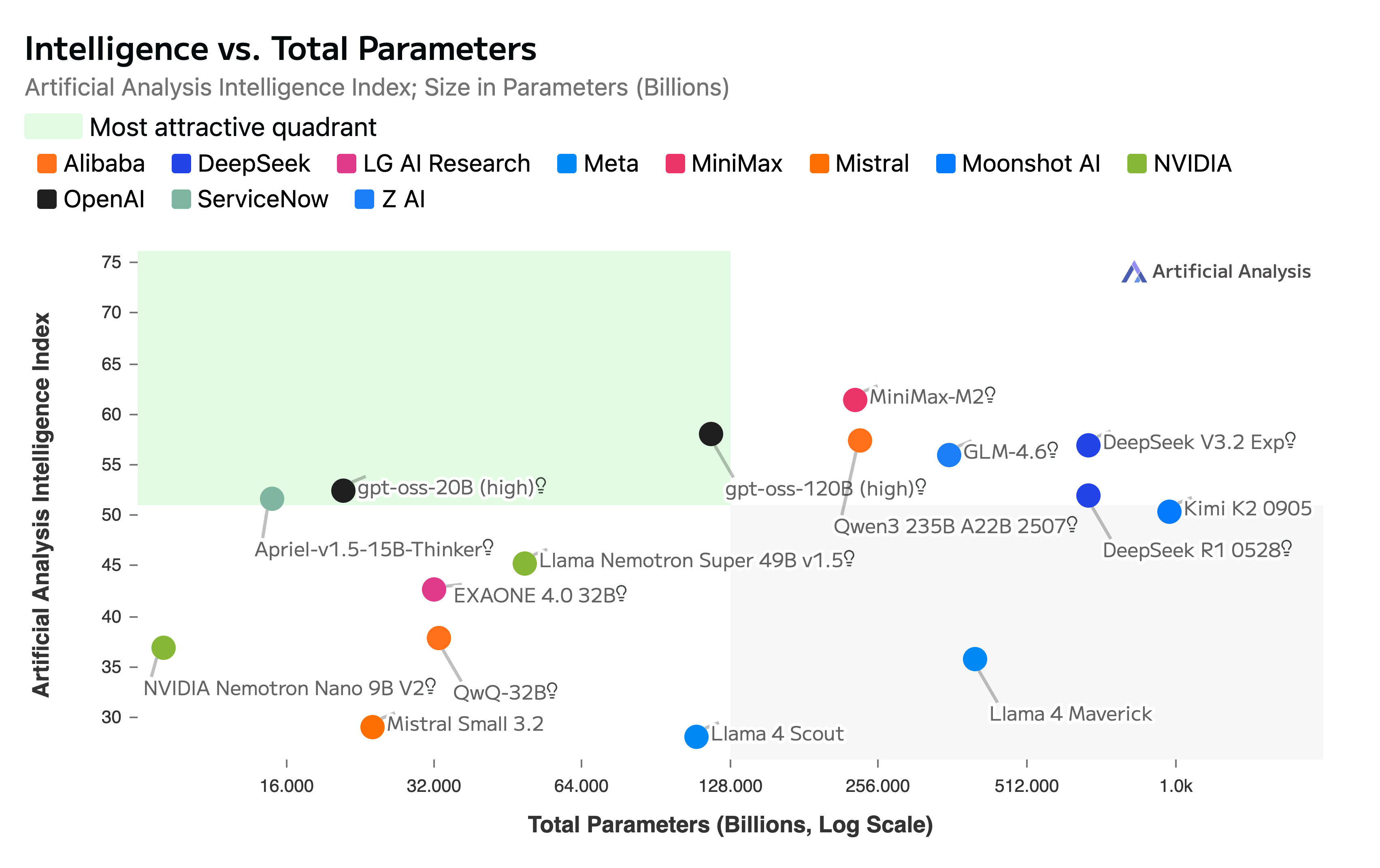

Intelligence vs Total Parameters

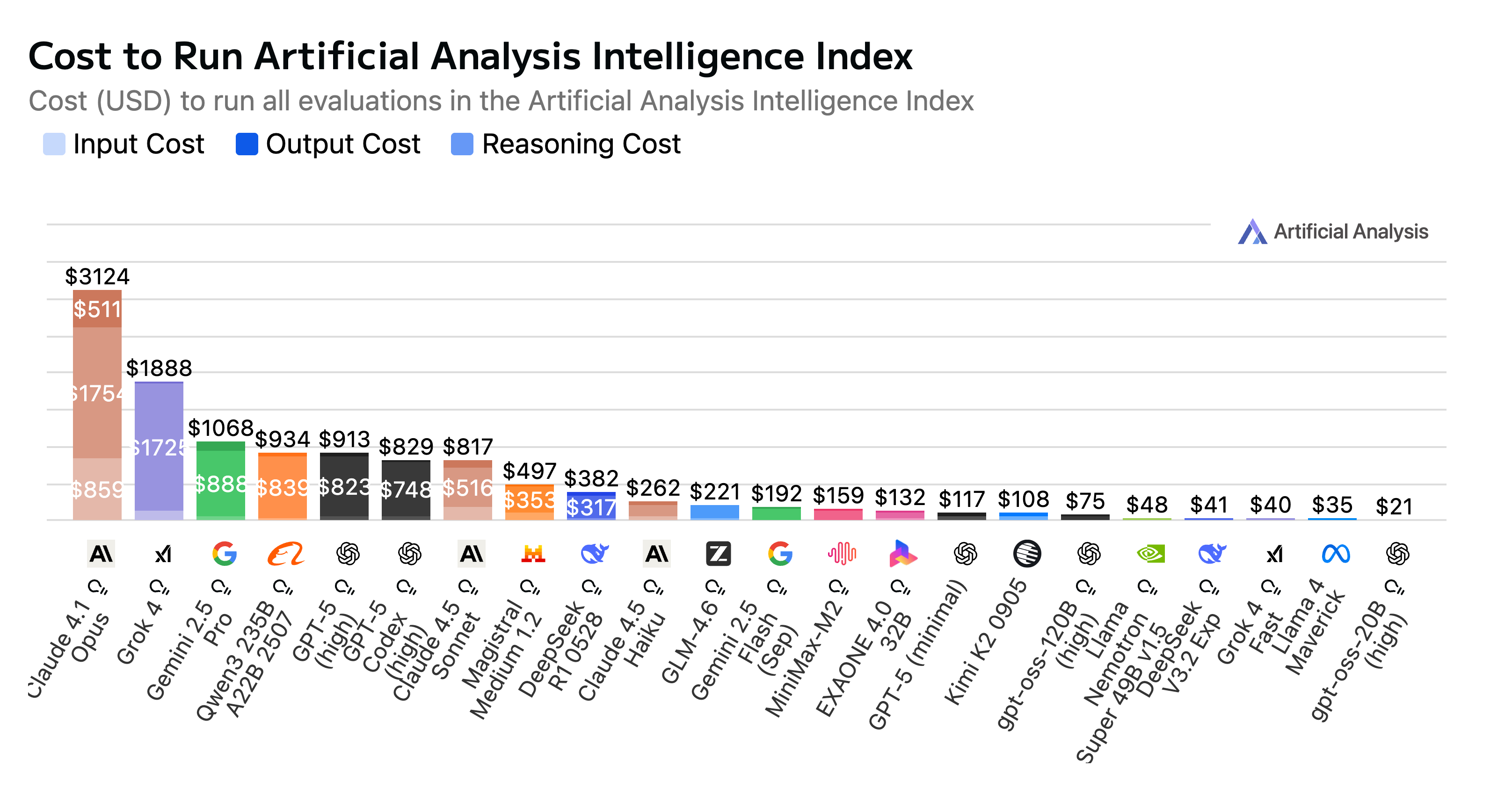

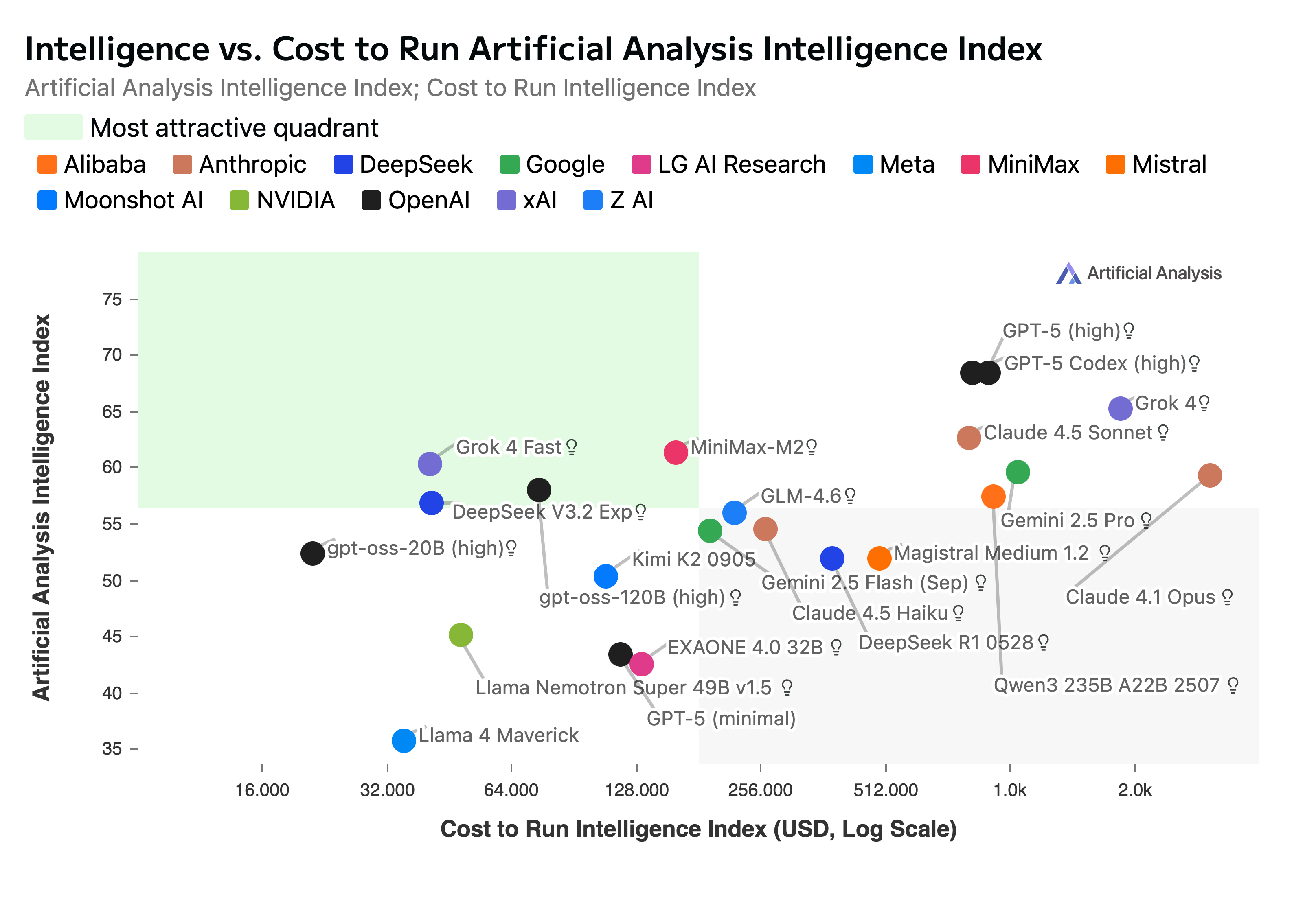

MiniMax-M2's efficiency to serve at scale supports MiniMax's competitive pricing of $0.3/$1.2 per 1M input/output tokens. However, the model is very verbose, with token usage equal highest alongside Grok 4. As such, while M2 is still much cheaper than many other leading models, its high token usage narrows that gap in the cost to run the Artificial Analysis Intelligence Index.
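To make the price-versus-verbosity trade-off concrete, here is a minimal sketch of the arithmetic. The prices and the 120M token figure are from this article; treating all 120M as output tokens, ignoring input-token spend, the function names, and the 30M-token comparison model are assumptions made purely for illustration. It does not reproduce the cost figures in the Artificial Analysis charts.

```python
# Prices are the per-1M-token figures quoted above. The 120M token count is
# treated as output tokens here, which is an assumption for this example.
INPUT_PRICE_PER_M = 0.3    # USD per 1M input tokens
OUTPUT_PRICE_PER_M = 1.2   # USD per 1M output tokens


def run_cost_usd(
    input_tokens_m: float,
    output_tokens_m: float,
    input_price: float = INPUT_PRICE_PER_M,
    output_price: float = OUTPUT_PRICE_PER_M,
) -> float:
    """Cost in USD of a run, with token counts given in millions."""
    return input_tokens_m * input_price + output_tokens_m * output_price


def break_even_output_price(
    own_tokens_m: float, own_price: float, other_tokens_m: float
) -> float:
    """Output price at which a model using other_tokens_m matches our spend."""
    return own_tokens_m * own_price / other_tokens_m


if __name__ == "__main__":
    # Output-token spend only; input tokens are set to zero (assumption).
    print(f"M2 output-token spend: ${run_cost_usd(0, 120):.2f}")
    # Hypothetical, less verbose model that needs 30M output tokens.
    price = break_even_output_price(120, OUTPUT_PRICE_PER_M, 30)
    print(f"Break-even output price for a 30M-token model: ${price:.2f} per 1M")
```

Under these assumptions, the output-token spend is about $144, and a hypothetical model that needed only a quarter of the tokens could charge four times the output price (about $4.80 per 1M) for the same spend. This is why a low per-token price does not translate directly into a proportionally low cost to run the index.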

Cost to Run Artificial Analysis Intelligence Index

Intelligence vs Cost to Run Artificial Analysis Intelligence Index

Output Tokens Used to Run Artificial Analysis Intelligence Index

Individual evaluation scores, all run independently and like-for-like by Artificial Analysis

Intelligence Evaluations

See Artificial Analysis for further benchmarks: https://artificialanalysis.ai/models/minimax-m2