August 7, 2025

GPT-5 Benchmarks and Analysis

OpenAI gave us early access to GPT-5, and our independent benchmarks verify a new high for AI intelligence. We have tested all four GPT-5 reasoning effort levels, revealing a 23x difference in token usage and cost between the 'High' and 'Minimal' options, along with substantial differences in intelligence.

We have run our full suite of eight evaluations independently across all reasoning effort configurations of GPT-5 and are reporting benchmark results for intelligence, token usage, and end-to-end latency.

What OpenAI released: OpenAI has released a single endpoint for GPT-5, but different reasoning efforts offer vastly different intelligence. GPT-5 with reasoning effort "High" reaches a new intelligence frontier, while "Minimal" is near GPT-4.1 level (but more token efficient).

Takeaways from our independent benchmarks:

⚙️ Reasoning effort configuration: GPT-5 offers four reasoning effort configurations: high, medium, low, and minimal. Reasoning effort options steer the model to "think" more or less hard for each query, driving large differences in intelligence, token usage, speed, and cost.

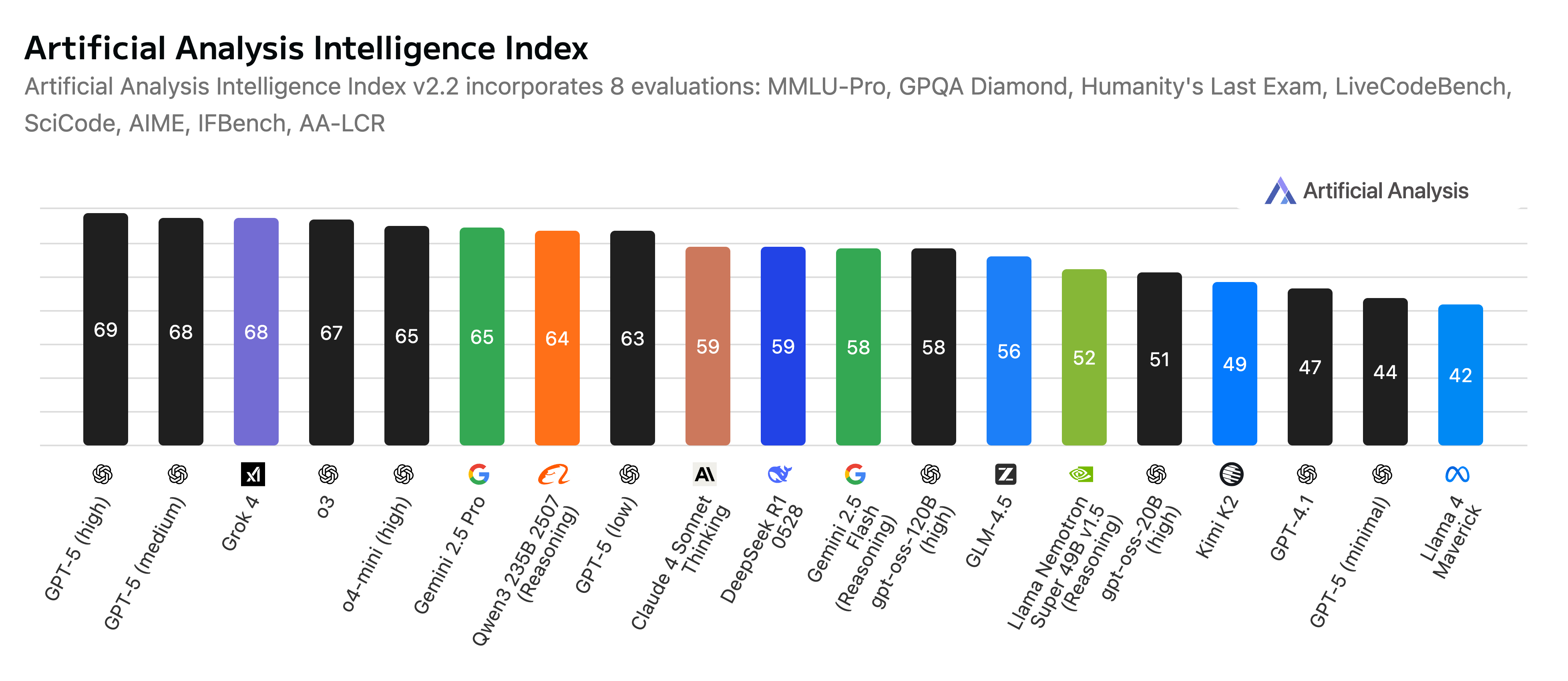
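To make the four configurations concrete, here is a minimal sketch of how one prompt could be prepared for each reasoning effort level against a single endpoint. The `reasoning_effort` field name, the `gpt-5` model string, and the `build_requests` helper are assumptions for illustration (modelled on the Chat Completions request shape), not a description of our evaluation harness. The sketch only builds request payloads and does not call any API.

```python
# Reasoning effort levels named in this post.
EFFORT_LEVELS = ["minimal", "low", "medium", "high"]


def build_requests(prompt, model="gpt-5"):
    """Build one request payload per reasoning effort level.

    Hypothetical helper: the "reasoning_effort" key and the model name are
    assumptions about the request format, not confirmed by this post.
    """
    return [
        {
            "model": model,
            "reasoning_effort": effort,
            "messages": [{"role": "user", "content": prompt}],
        }
        for effort in EFFORT_LEVELS
    ]


requests = build_requests("How many primes are there below 100?")
for request in requests:
    print(request["model"], request["reasoning_effort"])
```

Sending the same prompt under each payload and recording the output token count is one way to observe the effort-dependent differences described above.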

🧠 Intelligence achieved ranges from frontier to GPT-4.1 level: GPT-5 sets a new standard with a score of 68 on our Artificial Analysis Intelligence Index (MMLU-Pro, GPQA Diamond, Humanity's Last Exam, LiveCodeBench, SciCode, AIME, IFBench & AA-LCR) at High reasoning effort. Medium (67) is close to o3, Low (64) sits between DeepSeek R1 and o3, and Minimal (44) is close to GPT-4.1. While High sets a new standard, the increase over o3 is not comparable to the jump from GPT-3 to GPT-4 or GPT-4o to o1.

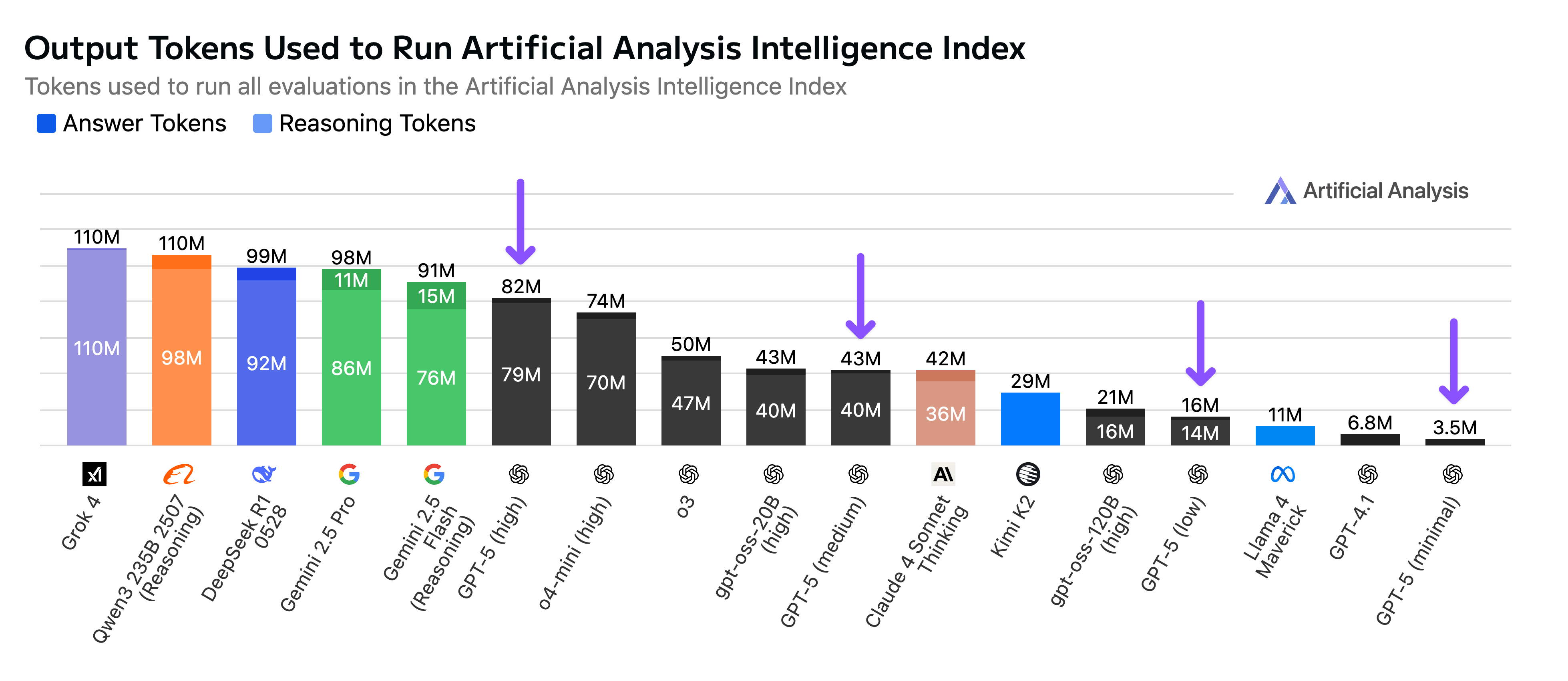

💬 Token usage varies 23x between reasoning efforts: GPT-5 with High reasoning effort used more tokens than o3 (82M vs. 50M) to complete our Index, but still fewer than Gemini 2.5 Pro (98M) and DeepSeek R1 0528 (99M). Minimal reasoning effort, however, used only 3.5M tokens, substantially fewer than GPT-4.1, making GPT-5 Minimal significantly more token-efficient for similar intelligence.
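The 23x figure follows directly from the token counts quoted above. As a quick check, the sketch below recomputes the ratios using only those reported numbers (in millions of tokens); the dictionary keys and the `ratio` helper are illustrative names.

```python
# Tokens (millions) used to run the Intelligence Index, as reported in this post.
tokens_m = {
    "GPT-5 (High)": 82.0,
    "GPT-5 (Minimal)": 3.5,
    "o3": 50.0,
    "Gemini 2.5 Pro": 98.0,
    "DeepSeek R1 0528": 99.0,
}


def ratio(a, b):
    """Return how many times more tokens model `a` used than model `b`."""
    return tokens_m[a] / tokens_m[b]


high_vs_minimal = ratio("GPT-5 (High)", "GPT-5 (Minimal)")
high_vs_o3 = ratio("GPT-5 (High)", "o3")

print(f"High vs Minimal: {high_vs_minimal:.1f}x")  # High vs Minimal: 23.4x
print(f"High vs o3: {high_vs_o3:.2f}x")            # High vs o3: 1.64x
```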

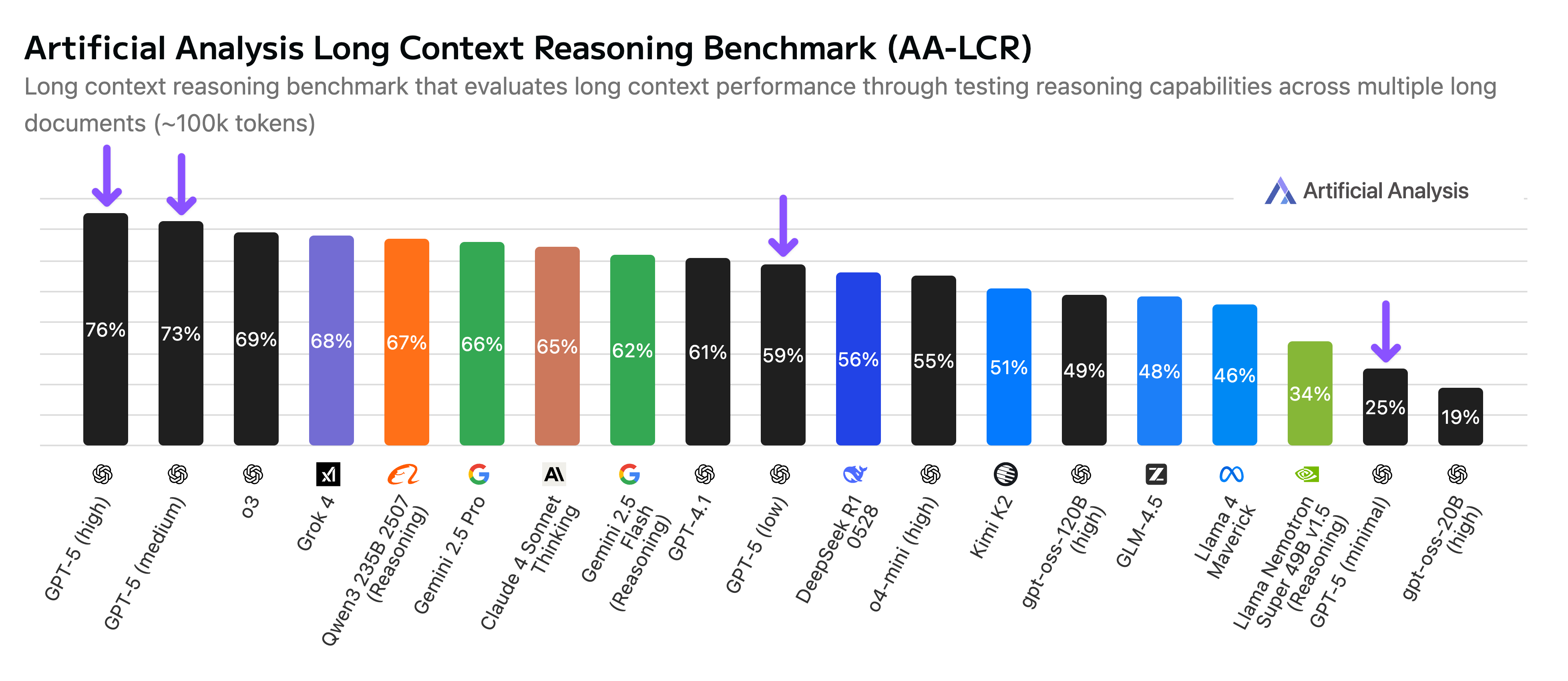

📖 Long Context Reasoning: We released our own Long Context Reasoning (AA-LCR) benchmark earlier this week to test the reasoning capabilities of models across long sequence lengths (sets of documents ~100k tokens in total, measured using the cl100k_base tokenizer). GPT-5 stands out for its performance in AA-LCR, with GPT-5 in both High and Medium reasoning efforts topping the benchmark.

🤖 Agentic Capabilities: OpenAI also commented on improvements across capabilities increasingly important to how AI models are used, including agents (long horizon tool calling). We recently added IFBench to our Intelligence Index to cover instruction following and will be adding further evals to cover agentic tool calling to independently test these capabilities.

📡 Vibe checks: We're testing the personality of the model through MicroEvals on our website, which supports running the same prompt across models and comparing results. It's free to use. We'll provide an update with our perspective shortly, but feel free to share your own!

Artificial Analysis Intelligence Index

Token usage (verbosity): GPT-5 with High reasoning effort uses 23x more tokens than with Minimal reasoning effort. That extra usage buys substantial intelligence gains overall, but the uplift between Medium and High is much smaller.
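The diminishing uplift can be read off the Intelligence Index scores reported earlier in this post (Minimal 44, Low 64, Medium 67, High 68). A short sketch of the step-by-step gains, using only those figures:

```python
# Intelligence Index scores per reasoning effort, as reported in this post.
scores = {"minimal": 44, "low": 64, "medium": 67, "high": 68}
levels = ["minimal", "low", "medium", "high"]

# Gain in Index points when moving up one reasoning effort level.
gains = {
    f"{lower}->{higher}": scores[higher] - scores[lower]
    for lower, higher in zip(levels, levels[1:])
}

for step, gain in gains.items():
    print(f"{step}: +{gain}")
# minimal->low: +20
# low->medium: +3
# medium->high: +1
```

Most of the gain comes from the first step up from Minimal, while the final step from Medium to High adds a single point.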

Output Tokens Used to Run Intelligence Index

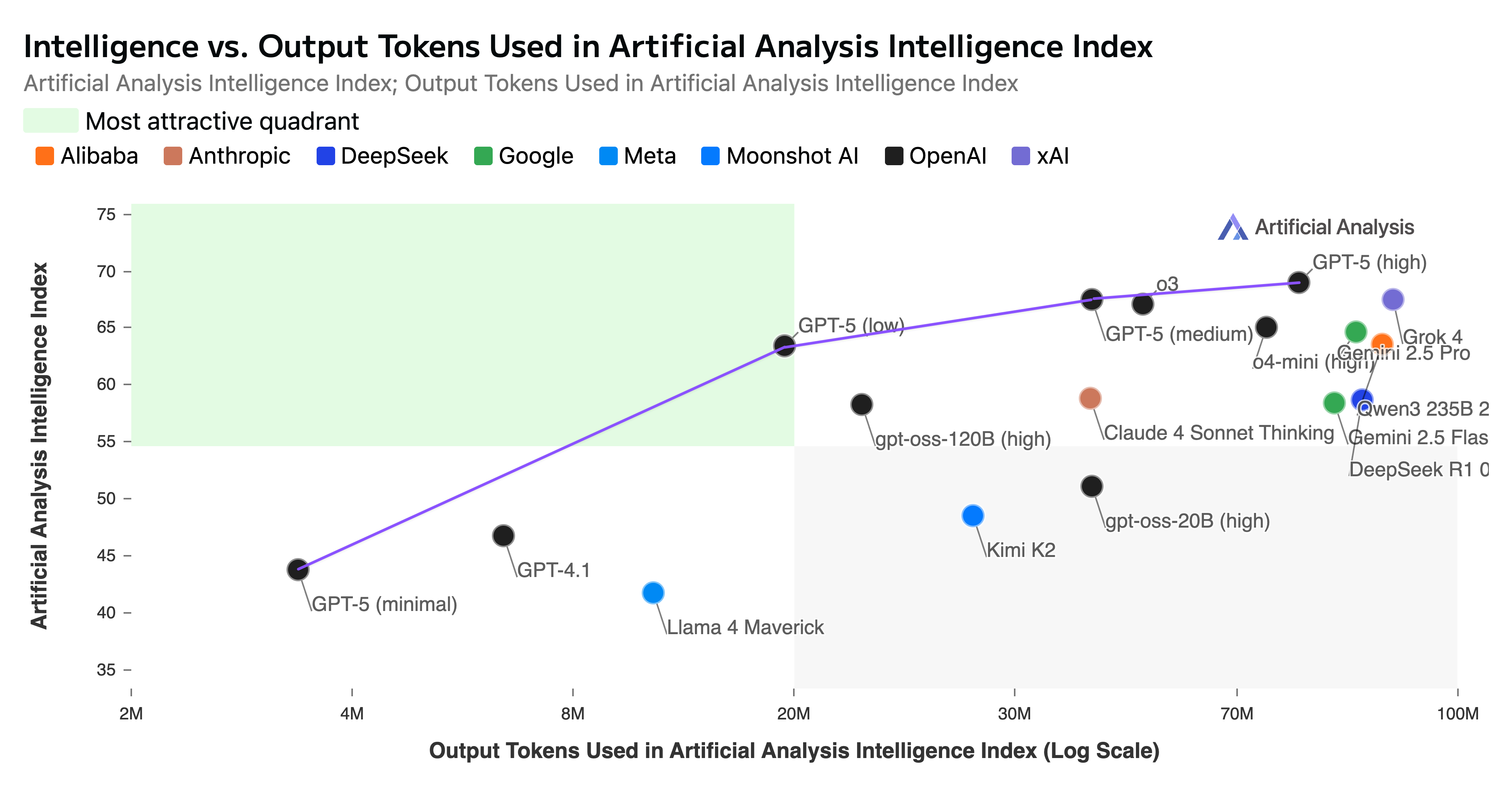

Intelligence vs Output Tokens Used

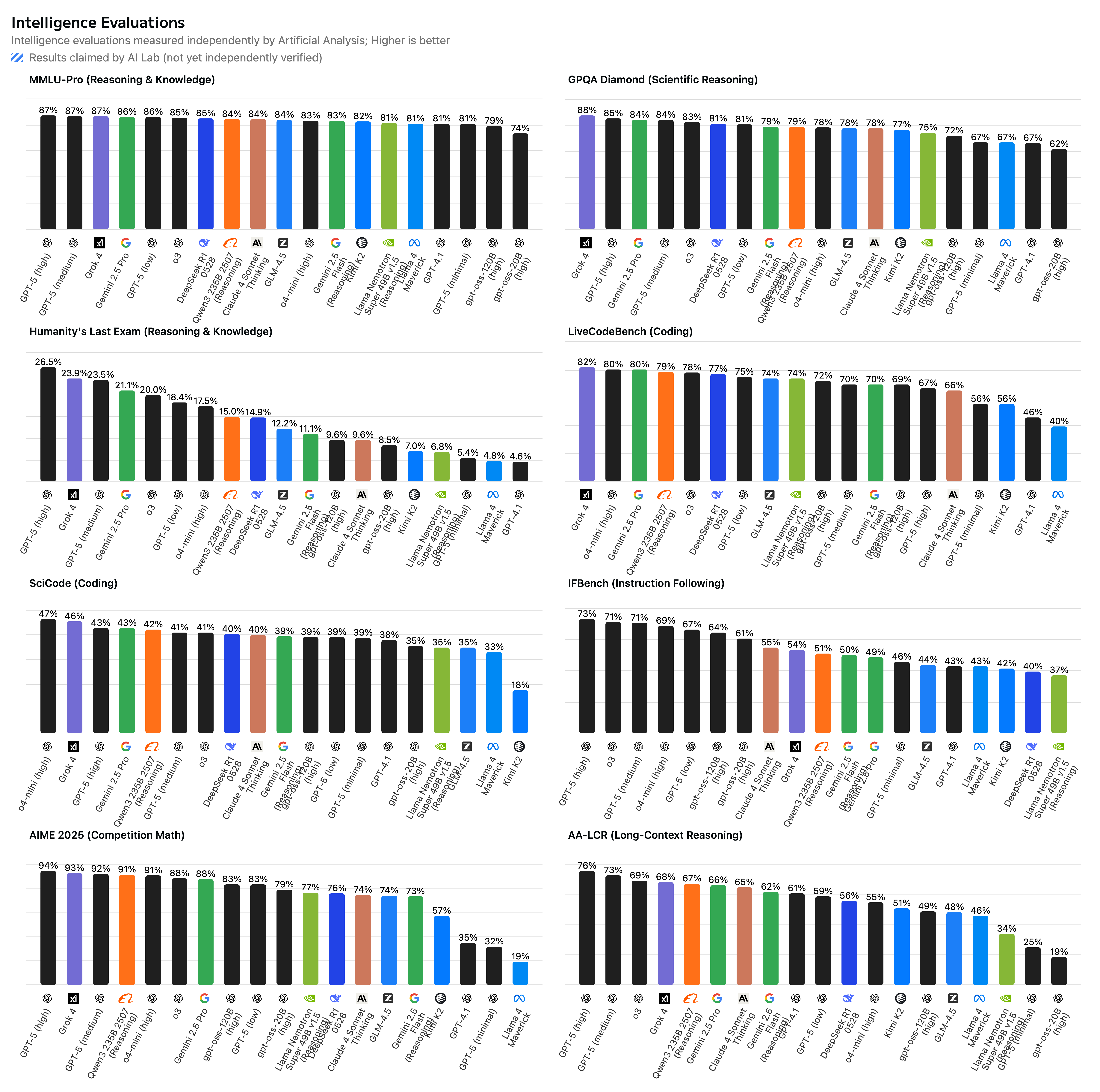

Individual intelligence benchmark results: GPT-5 performs well across our intelligence evaluations.

Intelligence Evaluations

Long context reasoning performance: A standout is long context reasoning, as shown by our AA-LCR evaluation, in which GPT-5 occupies the #1 and #2 positions.

Artificial Analysis Long Context Reasoning Benchmark

Further benchmarks on Artificial Analysis: