August 6, 2025

Analysis of OpenAI's gpt-oss models

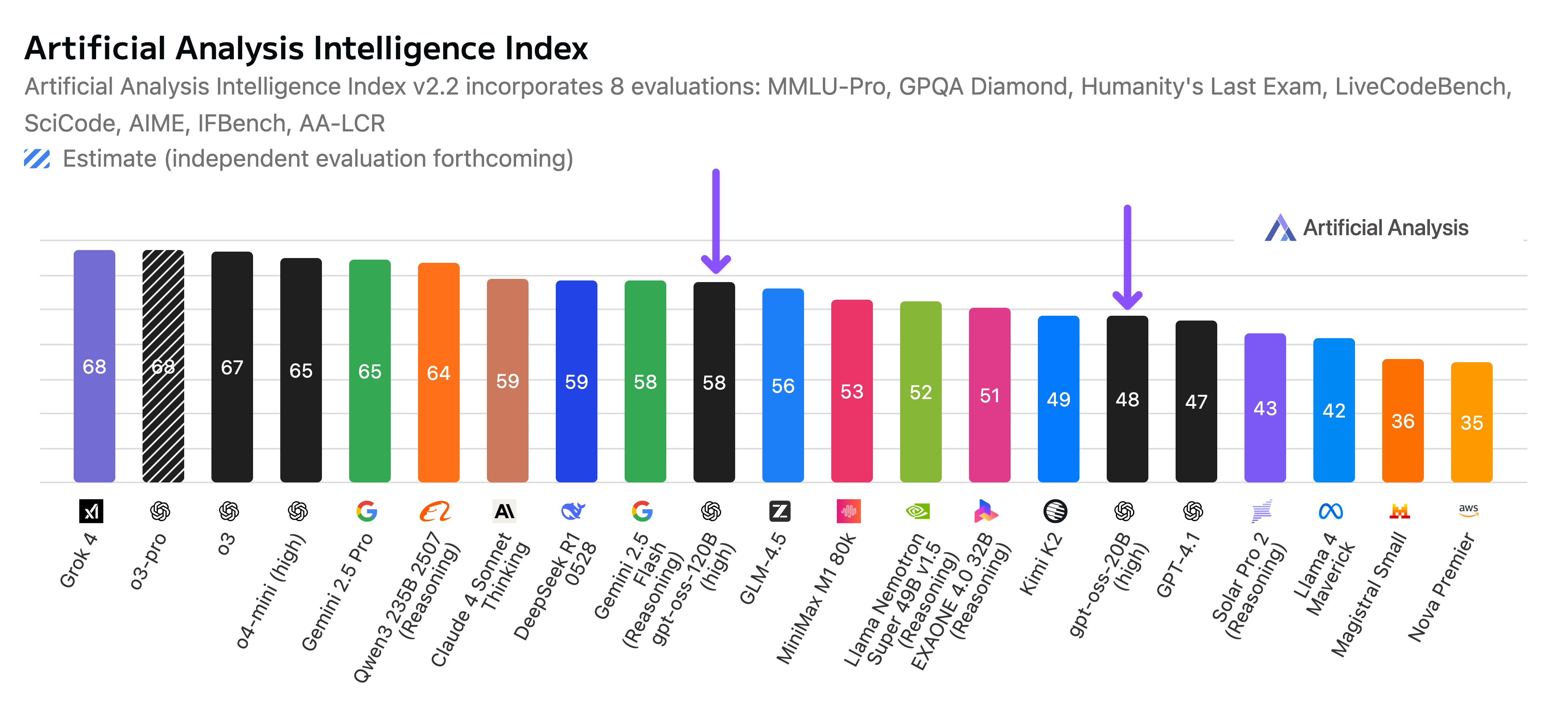

Independent benchmarks of OpenAI's gpt-oss models: gpt-oss-120b is the most intelligent American open weights model. It trails DeepSeek R1 and Qwen3 235B in intelligence but offers efficiency benefits.

OpenAI has released two versions of gpt-oss:

- gpt-oss-120b (117B total parameters, 5.1B active parameters): Intelligence Index score of 58

- gpt-oss-20b (20.9B total parameters, 3.6B active parameters): Intelligence Index score of 48

Size & Deployment

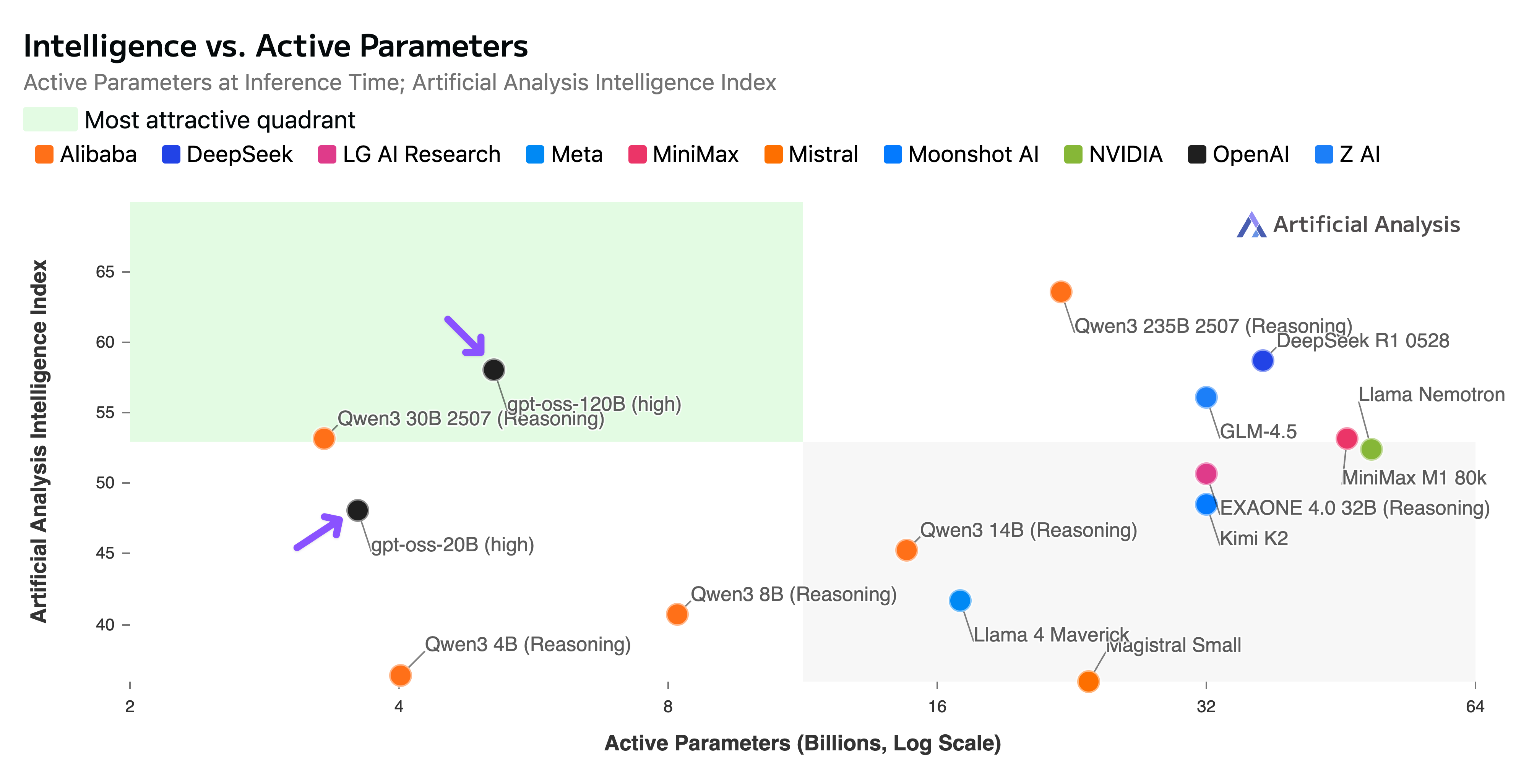

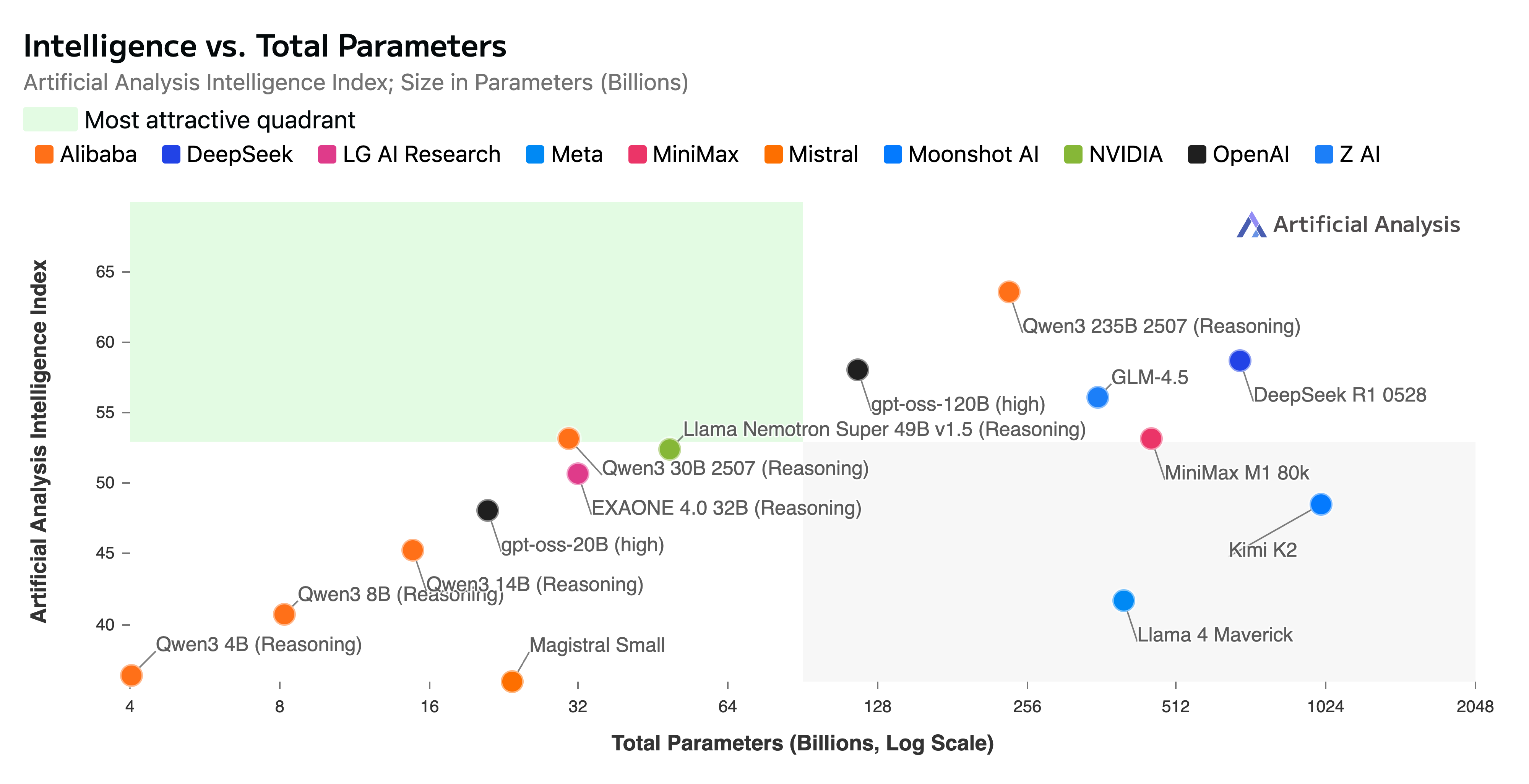

OpenAI has released both models in MXFP4 precision: gpt-oss-120b comes in at just 60.8GB and gpt-oss-20b at just 12.8GB. This means the 120B can be run in its native precision on a single 80GB NVIDIA H100, and the 20B can run comfortably on a consumer GPU or a laptop with more than 16GB of RAM. The small proportion of active parameters also helps inference efficiency and speed: the 120B model activates only 5.1B of its 117B parameters per token, compared with 17B active out of 109B total for Llama 4 Scout (a much less sparse design). This low active parameter count is what makes it possible to get dozens of output tokens/s from the 20B on recent MacBooks.
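The size and sparsity figures above can be checked with a little arithmetic. The sketch below derives the average stored bits per parameter from each checkpoint's file size, the share of parameters active per token, and whether the weights fit on one GPU. It assumes "GB" means 10^9 bytes and an 80GB H100, and it counts weights only; KV cache and activations need additional memory.

```python
# Back-of-the-envelope memory and sparsity arithmetic for the gpt-oss checkpoints.
# Assumptions (not stated in the text): "GB" means 10**9 bytes, the H100 is the
# 80 GB variant, and only weights are counted (no KV cache or activations).

H100_MEMORY_GB = 80.0  # assumed 80 GB H100

# name -> (file size in GB, total params in billions, active params in billions)
MODELS = {
    "gpt-oss-120b": (60.8, 117.0, 5.1),
    "gpt-oss-20b": (12.8, 20.9, 3.6),
}


def bits_per_param(file_size_gb: float, total_params_b: float) -> float:
    """Average stored bits per parameter implied by the checkpoint size."""
    return file_size_gb * 8 / total_params_b


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of total parameters used for each token."""
    return active_b / total_b


for name, (size_gb, total_b, active_b) in MODELS.items():
    print(
        f"{name}: {bits_per_param(size_gb, total_b):.2f} bits/param, "
        f"{active_fraction(active_b, total_b):.1%} active, "
        f"weights fit on one 80 GB H100: {size_gb < H100_MEMORY_GB}"
    )

# Llama 4 Scout for comparison: 17B active out of 109B total (from the text).
print(f"Llama 4 Scout: {active_fraction(17, 109):.1%} active")
```

Under these assumptions both checkpoints average roughly 4 to 5 bits per parameter, and the 120B's 60.8GB of weights leaves about 19GB of an 80GB H100 for KV cache and activations. The 120B activates about 4.4% of its parameters per token versus about 15.6% for Llama 4 Scout.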

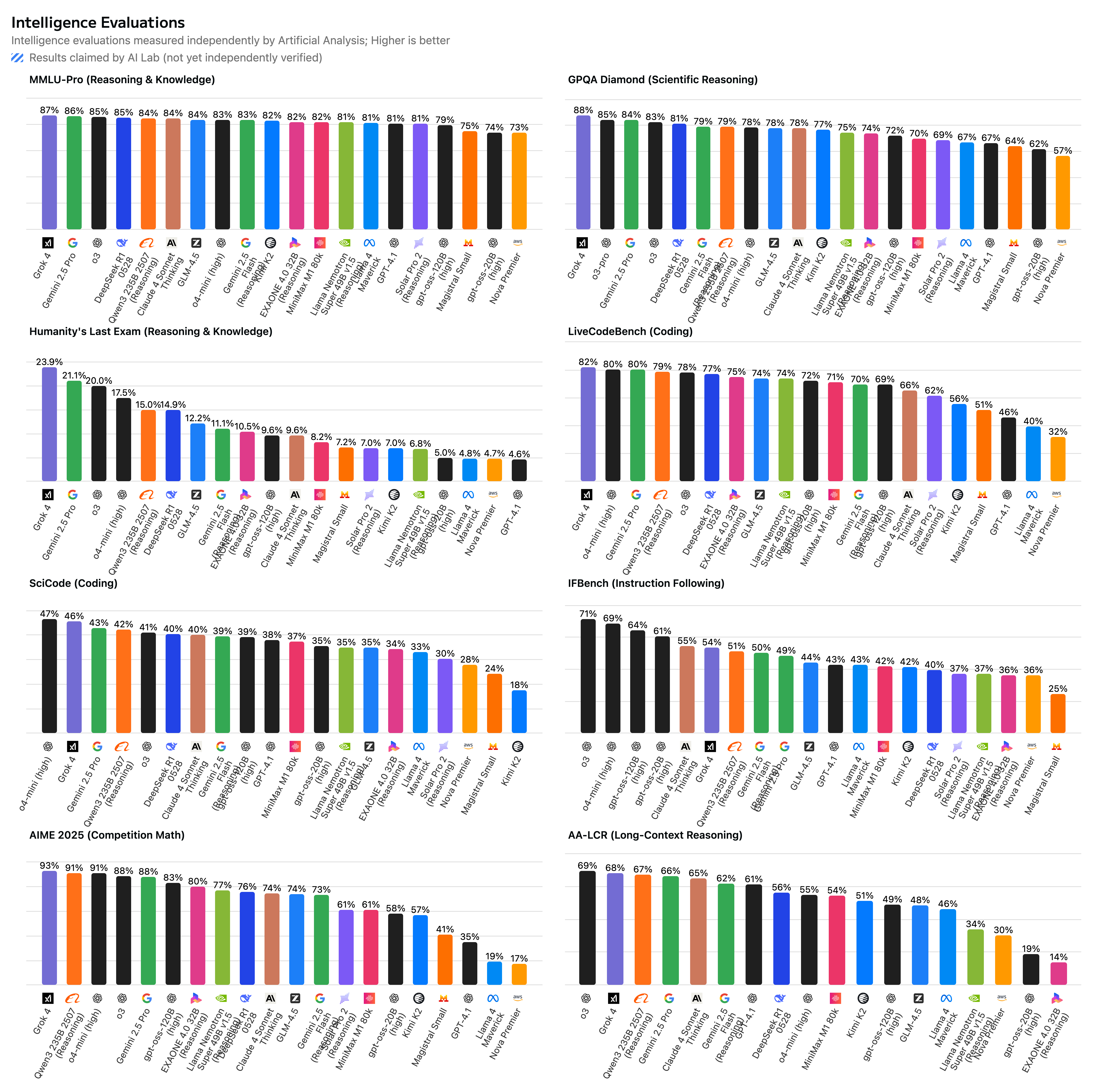

Intelligence

Both models score extremely well for their size and sparsity. We're seeing the 120B beat o3-mini but come in behind o4-mini and o3. The 120B is the most intelligent model that can be run on a single H100 and the 20B is the most intelligent model that can be run on a consumer GPU. Both models appear to place similarly across most of our evals, indicating no particular areas of weakness.

Comparison to Other Open Weights Models

While the larger gpt-oss-120b does not score above DeepSeek R1 0528 (59) or Qwen3 235B 2507 (64), it is notable that it is significantly smaller than both of those models in both total and active parameters. DeepSeek R1 has 671B total parameters and 37B active parameters, and is released natively in FP8 precision, making its total file size (and memory requirements) over 10x larger than gpt-oss-120b's. Both gpt-oss-120b and gpt-oss-20b are text-only models (as are competing models from DeepSeek, Alibaba and others).
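The "over 10x" file-size gap and the active-parameter gap can be reproduced from the figures in this article. The sketch assumes FP8 stores exactly 1 byte per parameter; a real FP8 checkpoint also carries scaling factors and possibly extra weights, so the DeepSeek R1 figure here is a lower bound.

```python
# Rough comparison of weight storage and active parameters.
# Assumption: FP8 = 1 byte per parameter, ignoring scales and auxiliary weights,
# so the DeepSeek R1 size below is a lower bound.

GPT_OSS_120B_FILE_GB = 60.8  # MXFP4 checkpoint size from the text

DEEPSEEK_R1_TOTAL_PARAMS_B = 671
FP8_BYTES_PER_PARAM = 1


def weights_gb(total_params_b: float, bytes_per_param: float) -> float:
    """Weight storage in GB (10**9 bytes) for a parameter count given in billions."""
    return total_params_b * bytes_per_param


r1_gb = weights_gb(DEEPSEEK_R1_TOTAL_PARAMS_B, FP8_BYTES_PER_PARAM)
print(f"DeepSeek R1 ~{r1_gb:.0f} GB vs gpt-oss-120b {GPT_OSS_120B_FILE_GB} GB: "
      f"{r1_gb / GPT_OSS_120B_FILE_GB:.1f}x larger")

# Active parameters per token, in billions (figures quoted in this article).
ACTIVE_B = {"gpt-oss-120b": 5.1, "DeepSeek R1 0528": 37, "Qwen3 235B 2507": 22}
for name in ("DeepSeek R1 0528", "Qwen3 235B 2507"):
    ratio = ACTIVE_B[name] / ACTIVE_B["gpt-oss-120b"]
    print(f"{name}: {ratio:.1f}x the active parameters of gpt-oss-120b")
```

Even under the generous 1-byte assumption, DeepSeek R1's weights come out about 11x larger, and its per-token compute (active parameters) is about 7x that of gpt-oss-120b.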

Architecture

The MoE architecture appears fairly standard. The MoE router selects the top 4 experts for each token. The 120B has 36 layers and the 20B has 24 layers. Each layer has 64 query heads and uses Grouped Query Attention (GQA) with 8 KV heads. Rotary position embeddings (RoPE) with YaRN scaling are used to extend the context window to 128k tokens. The 120B model activates 4.4% of its total parameters per forward pass, whereas the 20B model activates 17.2%. This may indicate that OpenAI views a higher degree of sparsity as optimal for larger models. It has been widely speculated that most flagship models from frontier labs have been sparse MoEs since GPT-4.
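A toy sketch of the two mechanisms named above: top-4 expert routing and GQA head sharing. Normalising the gate weights with a softmax over only the selected experts' logits is one common MoE gating variant and is an assumption here, not a confirmed detail of gpt-oss. The 8-expert router scores are hypothetical, and mapping consecutive query heads to the same KV head is the conventional GQA layout, also assumed.

```python
import math

TOP_K = 4           # experts selected per token (from the text)
N_QUERY_HEADS = 64  # query heads per layer (from the text)
N_KV_HEADS = 8      # KV heads per layer under GQA (from the text)


def route_top_k(router_logits: list[float], k: int = TOP_K) -> list[tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalise their logits.

    Returns (expert_index, gate_weight) pairs, highest score first; weights sum to 1.
    Assumed gating variant: softmax over the selected experts only.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    max_logit = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = [math.exp(router_logits[i] - max_logit) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def kv_head_for_query_head(q_head: int) -> int:
    """Under GQA, each group of consecutive query heads shares one KV head (assumed layout)."""
    group_size = N_QUERY_HEADS // N_KV_HEADS  # 8 query heads per KV head
    return q_head // group_size


# Toy example: 8 hypothetical experts with made-up router scores for one token.
logits = [0.1, 2.0, -1.0, 1.5, 0.3, 3.0, -0.5, 1.0]
for expert, weight in route_top_k(logits):
    print(f"expert {expert}: gate weight {weight:.3f}")

print("query head 13 reads KV head", kv_head_for_query_head(13))
print("KV cache size relative to one KV head per query head:", N_KV_HEADS / N_QUERY_HEADS)
```

Only the 4 selected experts run for the token, and their outputs would be combined using these gate weights. With 64 query heads sharing 8 KV heads, the KV cache is 1/8 the size it would be with one KV head per query head, which matters at 128k context lengths.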

API Providers

A number of inference providers have been quick to launch endpoints. We are currently benchmarking Groq, Cerebras, Fireworks and Together AI on Artificial Analysis and will add more providers as they launch endpoints.

Pricing

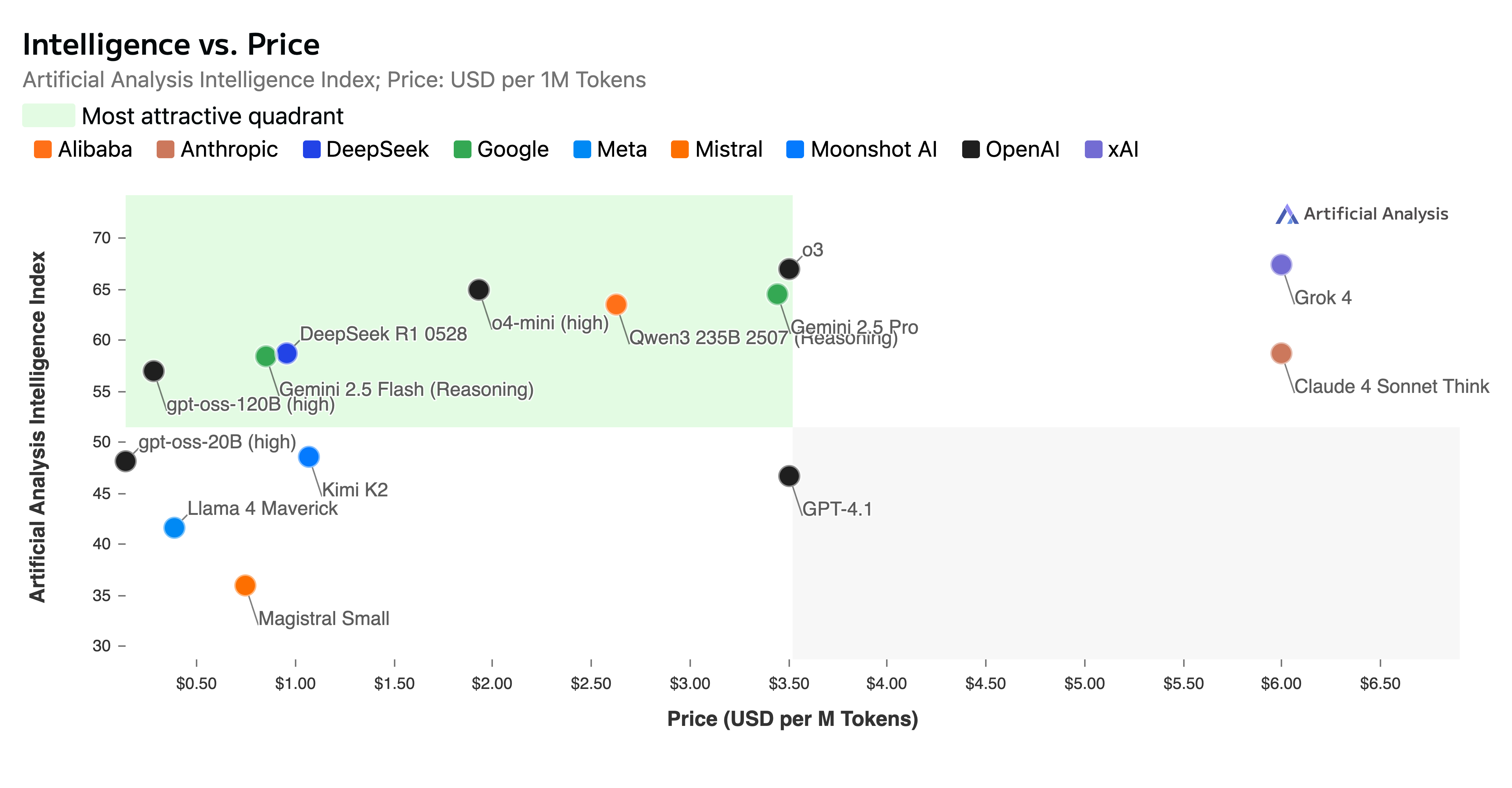

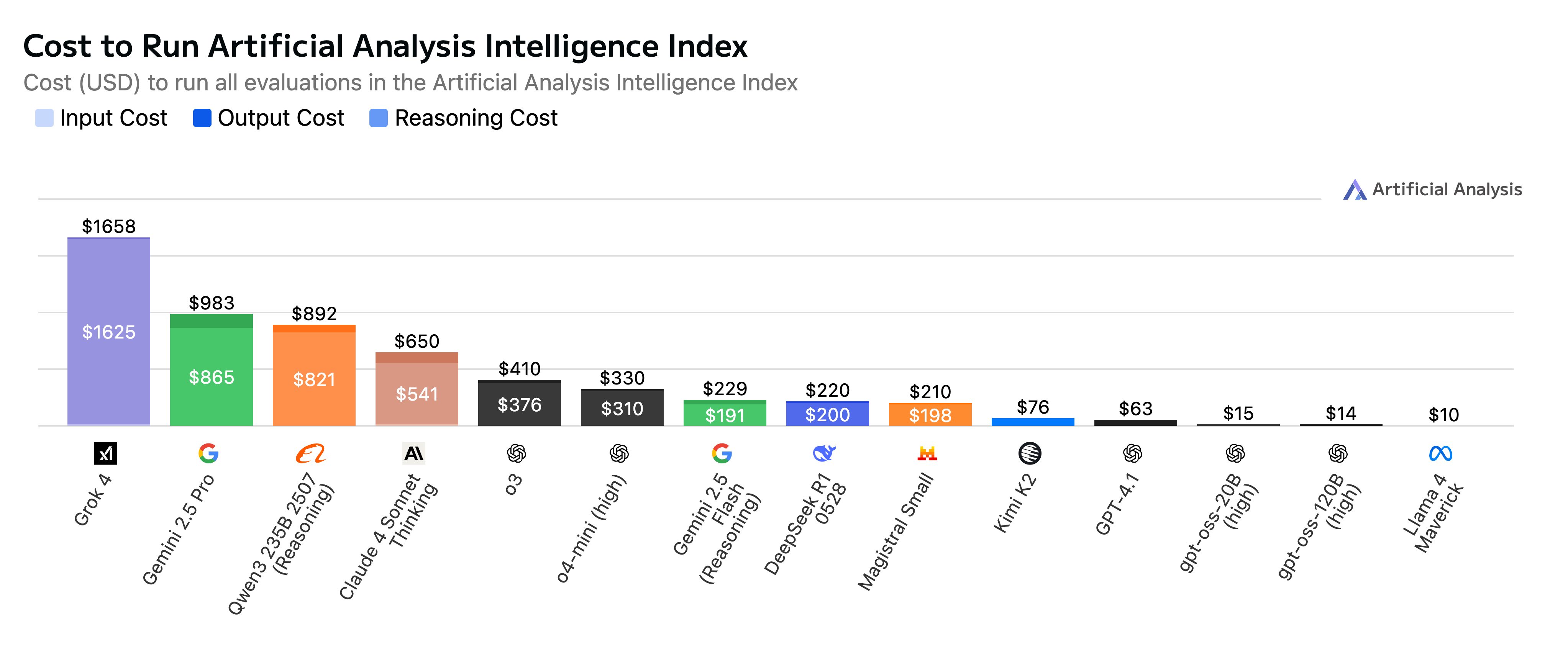

We're tracking median pricing across API providers of $0.15/$0.69 per million input/output tokens for the 120B and $0.08/$0.35 for the 20B. At these prices the 120B is roughly 6-7x cheaper than OpenAI's proprietary o4-mini API ($1.1/$4.4) and more than 10x cheaper than o3 ($2/$8).
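The price gap can be made explicit per token type and as a single blended figure. In the sketch below, the 3:1 input-to-output token weighting used for blending is an assumption (a common convention for summarising input/output pricing), not something stated above; the prices themselves are the ones quoted in this section.

```python
# Per-million-token prices quoted in the text: (input, output) in USD.
PRICES = {
    "gpt-oss-120b": (0.15, 0.69),
    "gpt-oss-20b": (0.08, 0.35),
    "o4-mini": (1.10, 4.40),
    "o3": (2.00, 8.00),
}


def blended_price(input_price: float, output_price: float, input_ratio: float = 3.0) -> float:
    """Weighted price per 1M tokens, assuming input_ratio input tokens per output token."""
    return (input_ratio * input_price + output_price) / (input_ratio + 1)


base_in, base_out = PRICES["gpt-oss-120b"]
base_blend = blended_price(base_in, base_out)
for name in ("o4-mini", "o3"):
    p_in, p_out = PRICES[name]
    print(
        f"{name}: input {p_in / base_in:.1f}x, output {p_out / base_out:.1f}x, "
        f"blended {blended_price(p_in, p_out) / base_blend:.1f}x the gpt-oss-120b price"
    )
```

With the assumed 3:1 blend, o4-mini comes out about 6.8x and o3 about 12.3x the price of gpt-oss-120b; a workload with more output tokens would narrow both gaps slightly, since the output-price ratios are the smaller ones.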

License

Apache 2.0 license - very permissive!

Analysis Charts

Below are detailed analyses with comprehensive charts:

Artificial Analysis Intelligence Index

Intelligence vs Active Parameters

Intelligence vs. Total Parameters: gpt-oss-120b is the most intelligent model that can fit on a single H100 GPU in its native precision.

Intelligence vs Total Parameters

Pricing: Across the API providers that launched with day-one coverage, we're seeing median prices of $0.15/$0.69 per million input/output tokens for the 120B and $0.08/$0.35 for the 20B. This makes both gpt-oss models highly cost-efficient options for developers.

Intelligence vs Price

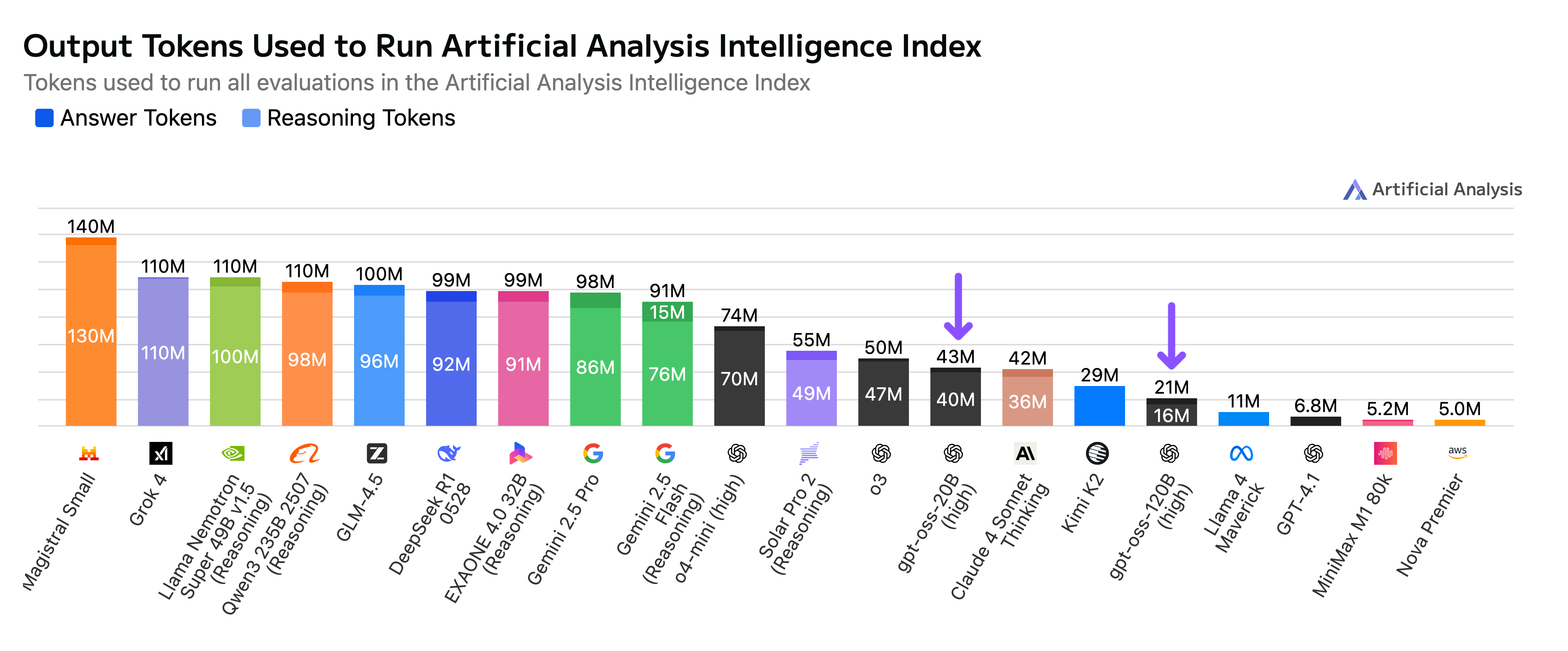

Output token usage: Relative to other reasoning models, both models are quite token-efficient even in their 'high' reasoning modes, particularly gpt-oss-120b, which used only 21M tokens to run our Artificial Analysis Intelligence Index benchmarks. This is 1/4 of the tokens o4-mini (high) used on the same benchmarks, 1/2 of what o3 used, and fewer than Kimi K2 (a non-reasoning model) used.
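The token-efficiency ratios translate into approximate token counts and output costs. In the sketch below, the o4-mini (high) and o3 counts are implied from the stated 1/4 and 1/2 ratios rather than measured, and only output tokens are priced (input tokens are ignored), so the costs are rough lower bounds and not the figures shown in the Cost to Run Intelligence Index chart.

```python
# Output tokens (millions) used to run the Intelligence Index.
GPT_OSS_120B_TOKENS_M = 21  # stated in the text

# Counts for the other models are implied from the stated ratios, not measured.
implied_tokens_m = {
    "gpt-oss-120b": GPT_OSS_120B_TOKENS_M,
    "o4-mini (high)": GPT_OSS_120B_TOKENS_M * 4,  # gpt-oss-120b used ~1/4 as many
    "o3": GPT_OSS_120B_TOKENS_M * 2,              # gpt-oss-120b used ~1/2 as many
}

# Output price in USD per 1M tokens: median provider price for gpt-oss-120b and
# OpenAI API prices for o4-mini and o3, as quoted in the Pricing section.
output_price_per_m = {"gpt-oss-120b": 0.69, "o4-mini (high)": 4.40, "o3": 8.00}


def output_cost_usd(tokens_m: float, price_per_m: float) -> float:
    """Cost of generating tokens_m million output tokens (input tokens ignored)."""
    return tokens_m * price_per_m


for name, tokens in implied_tokens_m.items():
    cost = output_cost_usd(tokens, output_price_per_m[name])
    print(f"{name}: ~{tokens}M output tokens, ~${cost:.2f} in output-token cost")
```

Fewer tokens and a lower per-token price compound: under these assumptions gpt-oss-120b's output tokens cost about $14.49, against roughly $370 for o4-mini (high) and $336 for o3.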

Output Tokens Used

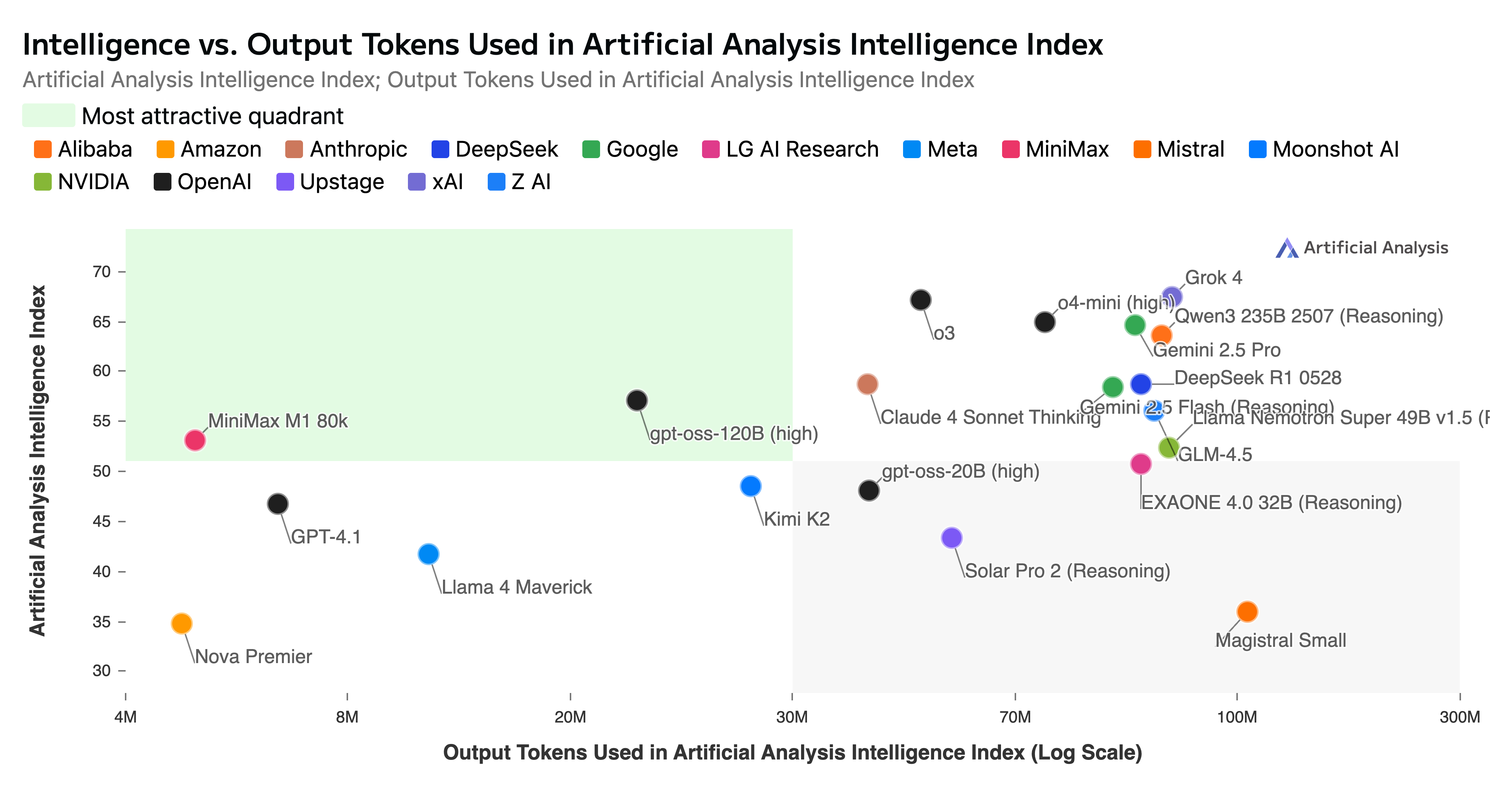

Intelligence vs Output Tokens Used

Cost to Run Intelligence Index

Individual Evaluation Results

Individual evaluation results from benchmarks we have run independently:

Intelligence Evaluations

gpt-oss-120b is now the leading US open weights model. Qwen3 235B from Alibaba is the leading Chinese open weights model and offers greater intelligence, but it is much larger (235B total parameters and 22B active, vs gpt-oss-120b's 117B total and 5.1B active).

Open Weights Model Intelligence by Country

See Artificial Analysis for Further Analysis

Comparisons to other models: https://artificialanalysis.ai/models

Comparisons to other open weights models: https://artificialanalysis.ai/models/open-source

Benchmarks of providers serving gpt-oss-120b: https://artificialanalysis.ai/models/gpt-oss-120b/providers