June 9, 2025

Independent Performance Analysis of Leading GPUs: AMD MI300X, NVIDIA H100, NVIDIA H200

Introduction

In this analysis, we assess the system performance of the AMD MI300X, NVIDIA H100, and NVIDIA H200 systems using the Artificial Analysis System Load Test. The focus of this benchmarking is to compare key performance metrics important to enterprise deployments across various system loads.

Our benchmarking involved conducting the Artificial Analysis System Load Test on NVIDIA 8xH100, 8xH200, and AMD 8xMI300X systems on two leading open-weight large language models: DeepSeek R1 and Llama 4 Maverick.

We were engaged by AMD to conduct benchmarking of the AMD MI300X, and to compare system performance to our benchmarking results for NVIDIA hardware. AMD provided access to a system on DigitalOcean. All benchmarking was conducted according to Artificial Analysis' independently developed and publicly documented methodology.

Detailed methodology, configuration parameters, and performance data are included throughout this analysis to provide transparency regarding the results and to support replication.

Benchmarking Methodology: Artificial Analysis System Load Test (AA-SLT)

All performance results in this analysis are produced with the Artificial Analysis System Load Test (AA-SLT), our standardized benchmarking framework designed to evaluate AI hardware performance under realistic load conditions. AA-SLT measures how system throughput and per-query performance scale as the load on a system increases. Full methodology details are disclosed on our website, and a summary is included at the bottom of this article.

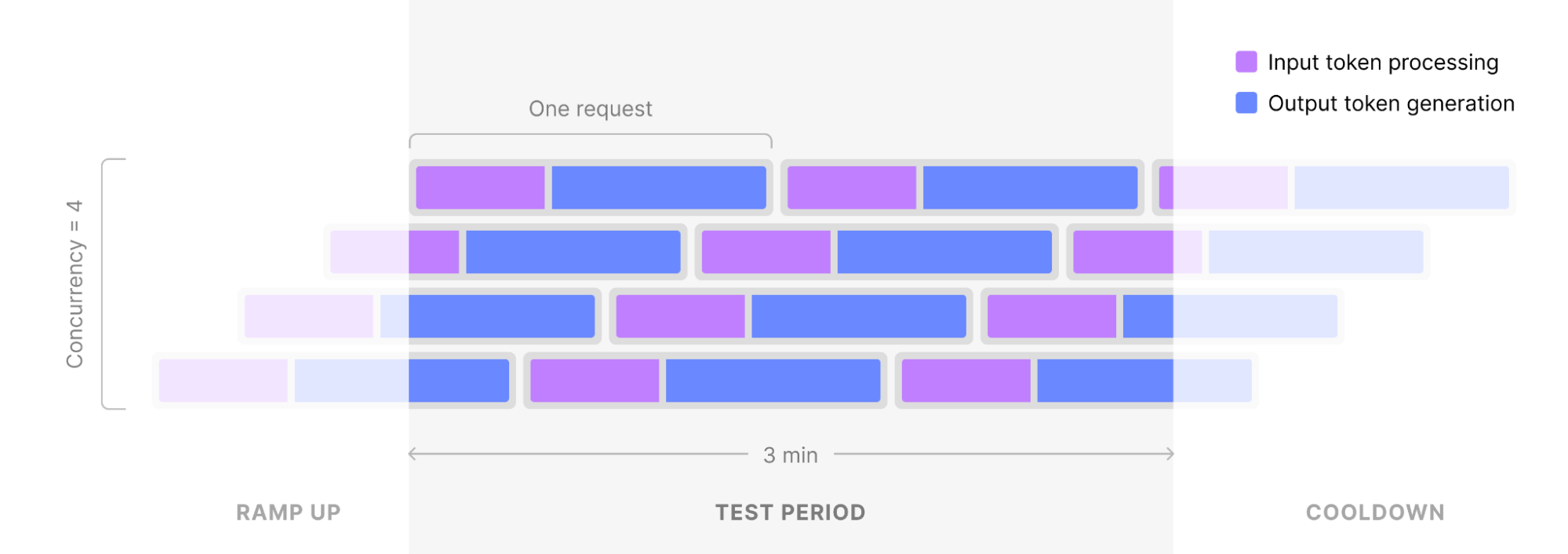

Illustrative overview of the Artificial Analysis System Load Test (example shows 4 concurrent requests)


Benchmarking Results

Across all systems, benchmarking results demonstrate a clear trade-off between per-query latency on the one hand and aggregate system output throughput, or the number of queries a system supports, on the other. NVIDIA H100 & H200 systems typically delivered faster output speeds and lower per-query end-to-end latencies at lower concurrencies, while the AMD MI300X system achieved higher peak system throughput at high concurrency. Output speeds between NVIDIA and AMD accelerators were comparable at medium concurrency levels.

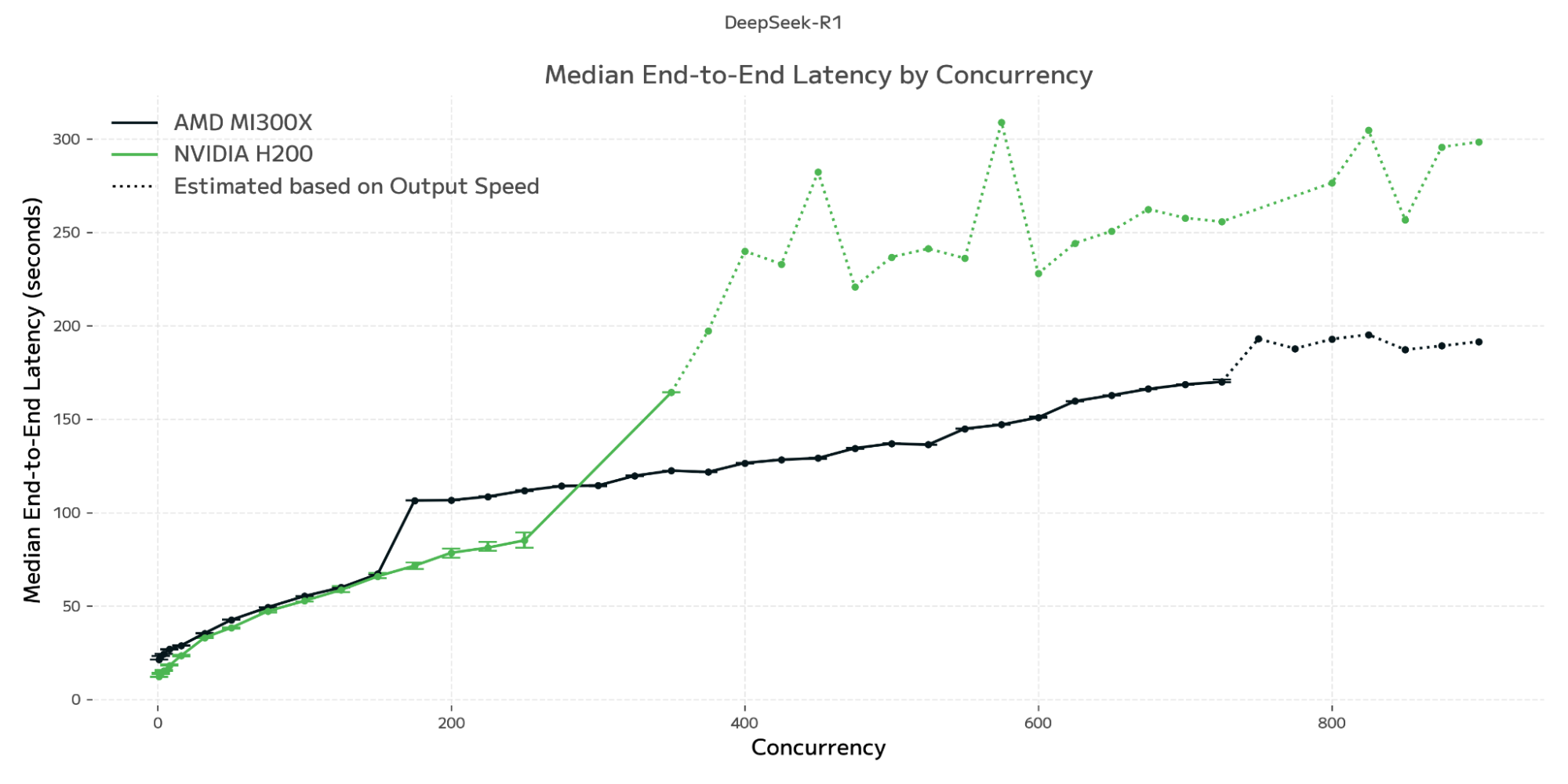

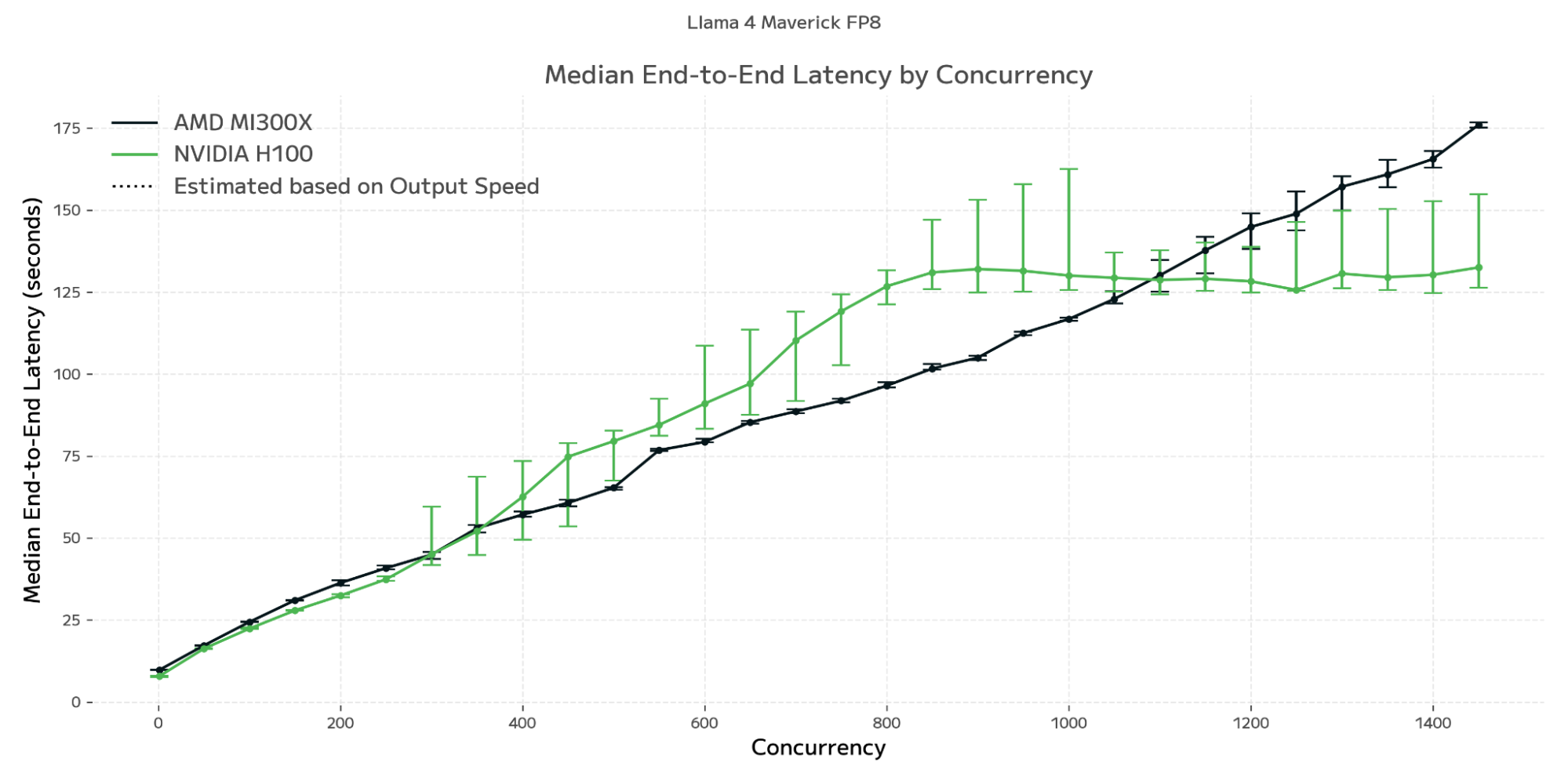

The AMD MI300X and NVIDIA H100 & H200 systems offer similar end-to-end latencies at lower numbers of concurrent queries. However, the AMD MI300X offers better end-to-end latencies at higher concurrency, especially when considering the share of queries that received a response in the test period. NVIDIA's accelerators offer advantages for latency-sensitive workloads, while AMD's MI300X offers advantages where efficiency matters most, such as workloads with large batch sizes.

The cost per token delivered by a system can be calculated by dividing a cost metric by system throughput. At low concurrency, NVIDIA accelerators offer a slightly lower implied cost per token driven by slightly higher system throughput. At higher concurrency, AMD MI300X offers a lower cost per token.
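As a rough illustration of this calculation, the sketch below divides an hourly system cost by hourly token output. The hourly price is a hypothetical placeholder and is not a figure from this analysis; the two throughput values are the approximate DeepSeek R1 results reported for the AMD MI300X system at 16 concurrent queries and at peak.

```python
def cost_per_million_tokens(hourly_cost_usd: float, throughput_tokens_per_s: float) -> float:
    """Implied cost in USD per one million output tokens."""
    if throughput_tokens_per_s <= 0:
        raise ValueError("throughput must be positive")
    tokens_per_hour = throughput_tokens_per_s * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical hourly price for an 8-GPU system (assumption, illustration only).
HYPOTHETICAL_HOURLY_COST_USD = 20.0

# Approximate system throughputs (output tokens/s) taken from the results text.
cost_at_16_concurrent = cost_per_million_tokens(HYPOTHETICAL_HOURLY_COST_USD, 500)
cost_at_peak = cost_per_million_tokens(HYPOTHETICAL_HOURLY_COST_USD, 4100)

print(f"16 concurrent queries: ${cost_at_16_concurrent:.2f} per 1M output tokens")
print(f"Peak throughput:       ${cost_at_peak:.2f} per 1M output tokens")
```

Because the cost metric sits in the numerator and throughput in the denominator, any increase in system throughput at a fixed price lowers the implied cost per token proportionally.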

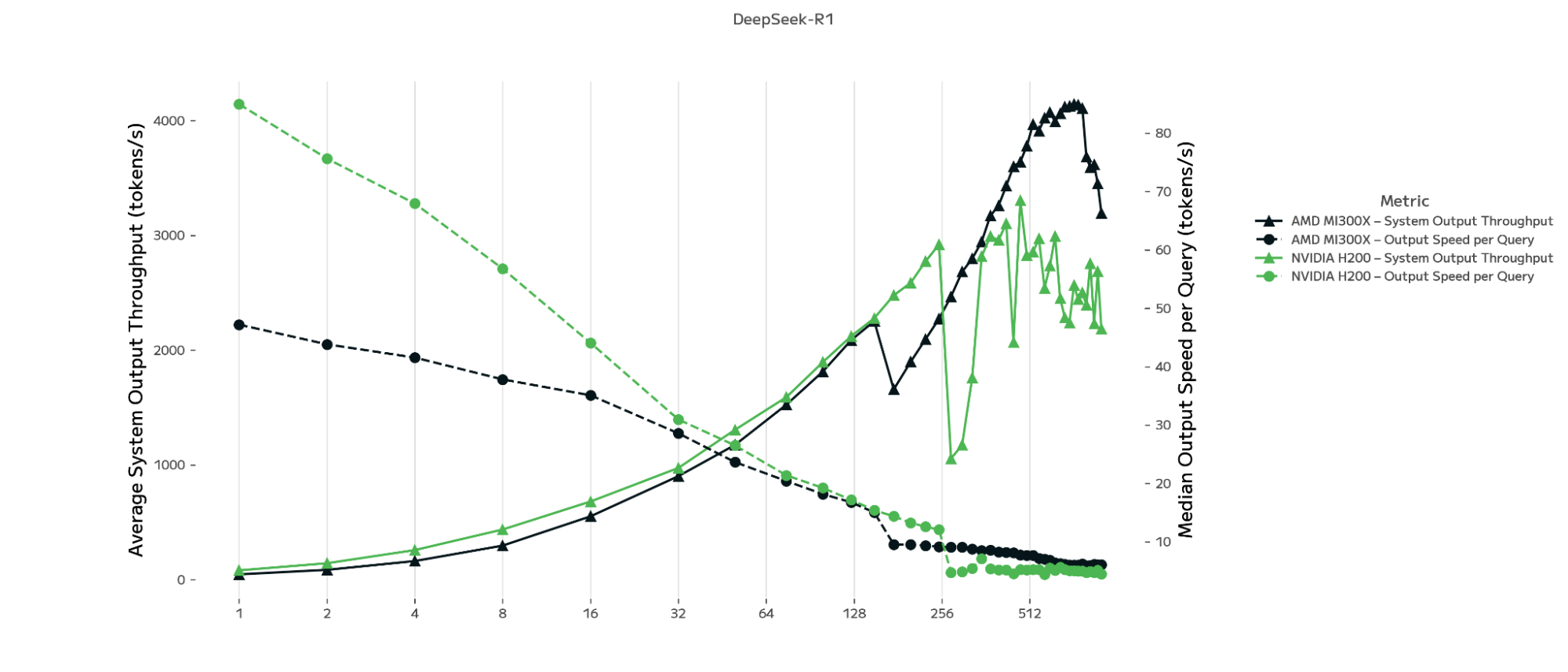

DeepSeek R1: Average System Throughput and Median Output Speed vs. Concurrency


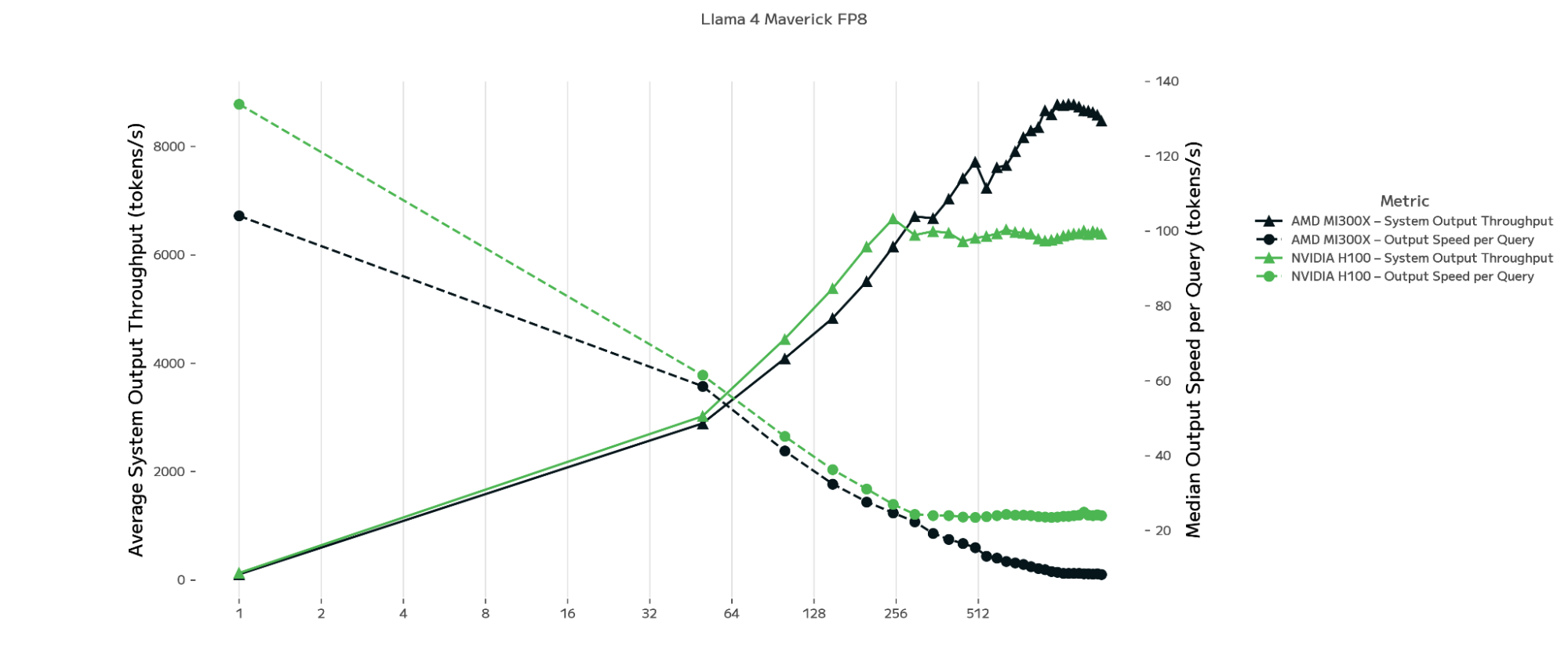

Llama 4 Maverick: Average System Throughput and Median Output Speed vs. Concurrency


Detailed Benchmarking Results

Lower concurrency performance & latency sensitive workloads:

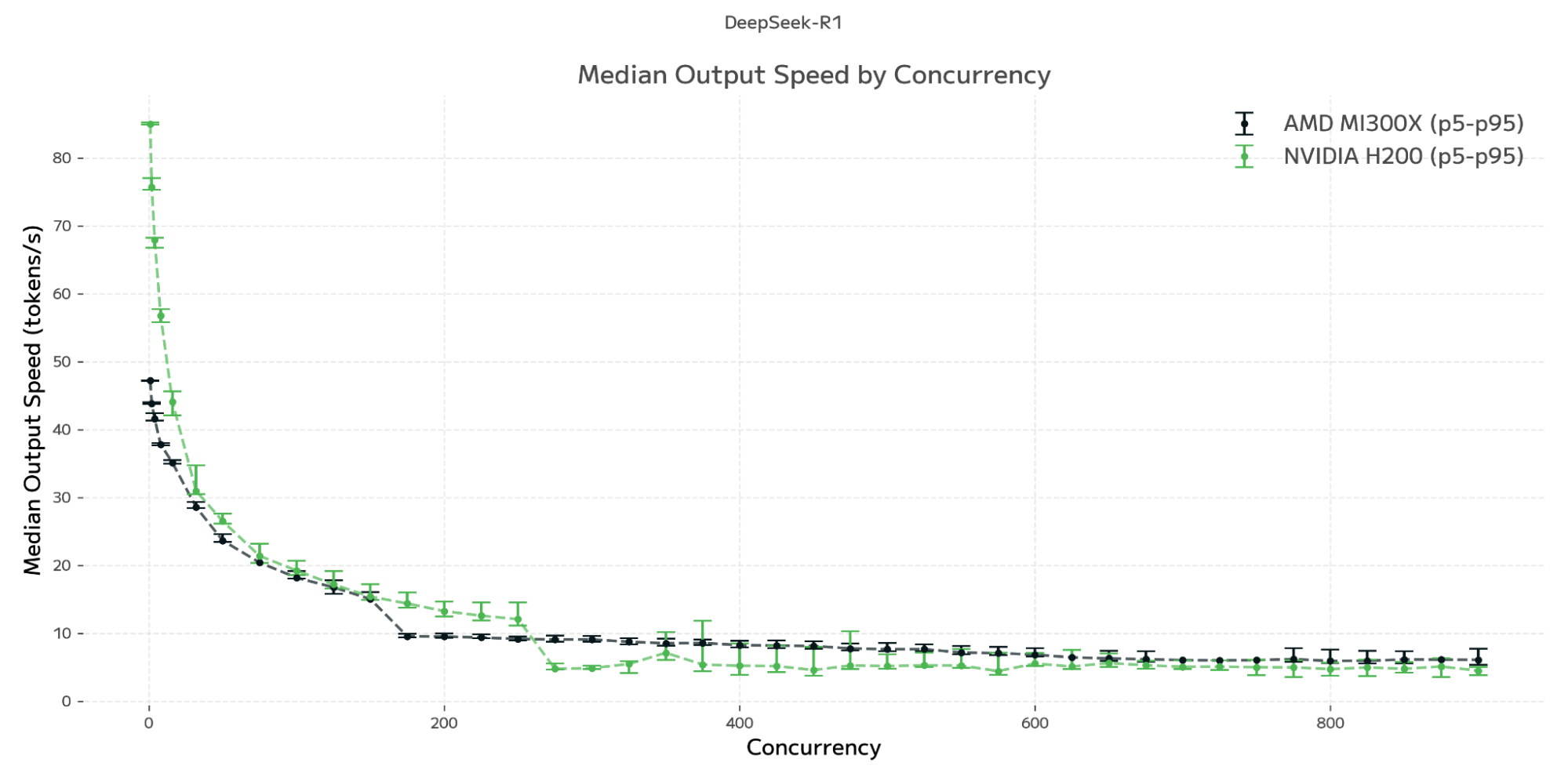

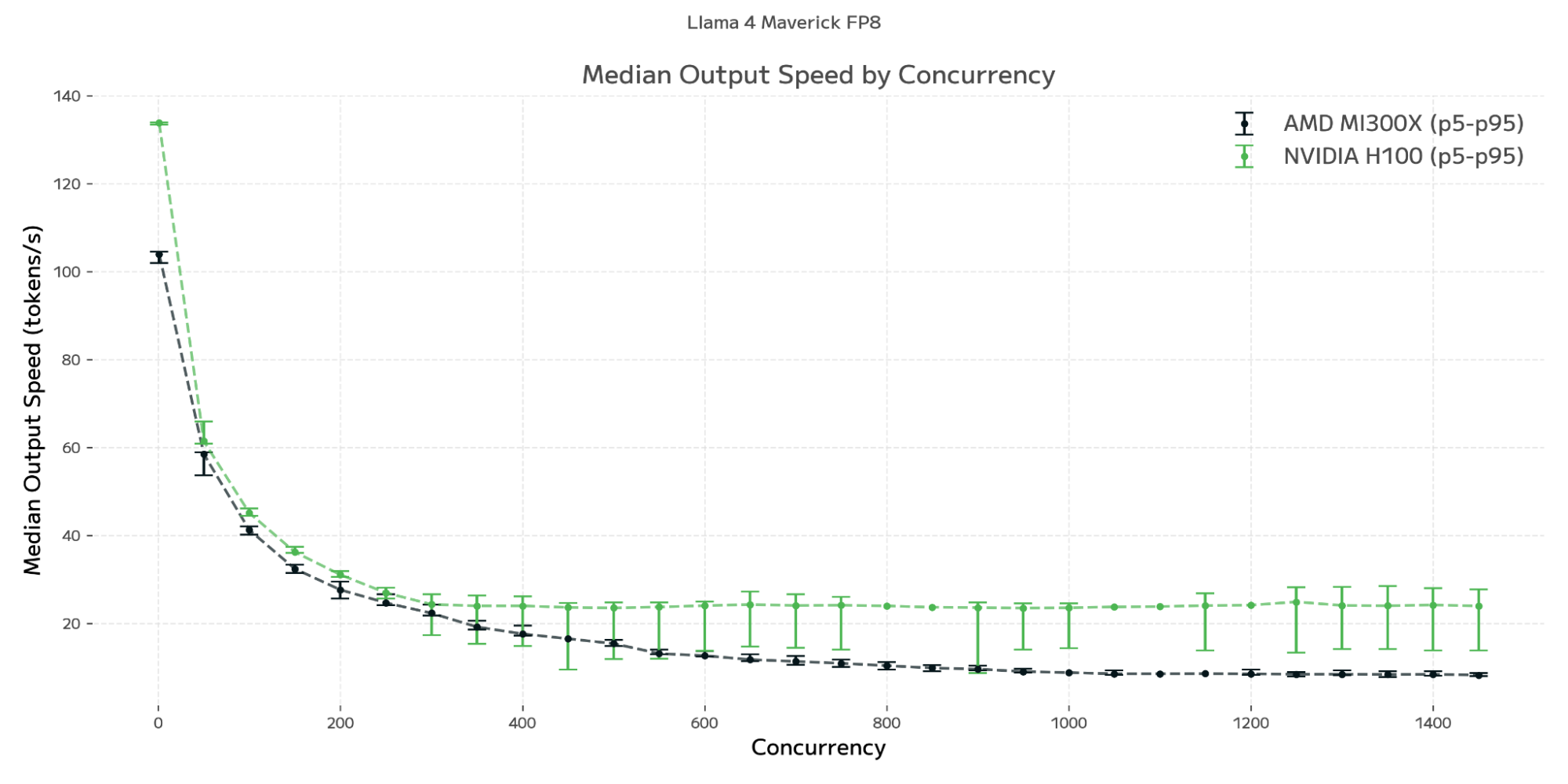

- At lower concurrencies (<~250 concurrent queries), NVIDIA H100 & H200 systems demonstrate marginally faster average per-query output speeds, lower average end-to-end latencies, and higher system throughput than the AMD MI300X system across our benchmarking of both DeepSeek R1 and Meta Llama 4 Maverick.

- DeepSeek R1: At 16 concurrent queries, the NVIDIA H200 system achieved a median speed per query of ~45 output tokens/s with a system throughput of ~600 output tokens/s, whereas the AMD MI300X system achieved ~35 output tokens/s per query and a system throughput of ~500 output tokens/s.

- Llama 4 Maverick: At 16 concurrent queries, the NVIDIA H100 system achieved a median speed per query of ~90 output tokens/s with a system throughput of ~2,150 output tokens/s, whereas the AMD MI300X system achieved ~70 output tokens/s per query and a system throughput of ~2,100 output tokens/s.

- As such, the NVIDIA systems offer advantages for latency-sensitive workloads. These can include chatbots, real-time services, and some agentic applications.

High concurrency performance:

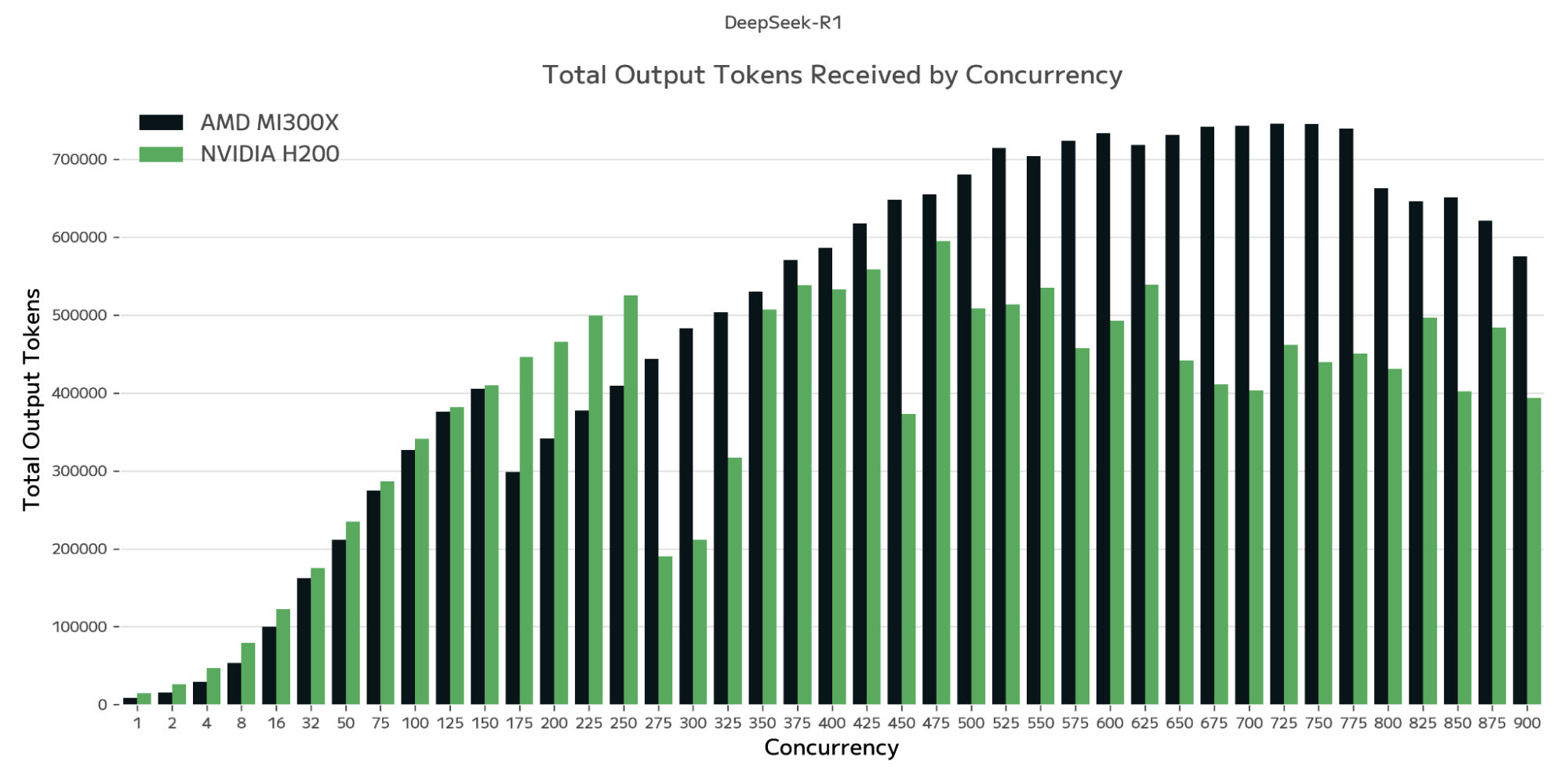

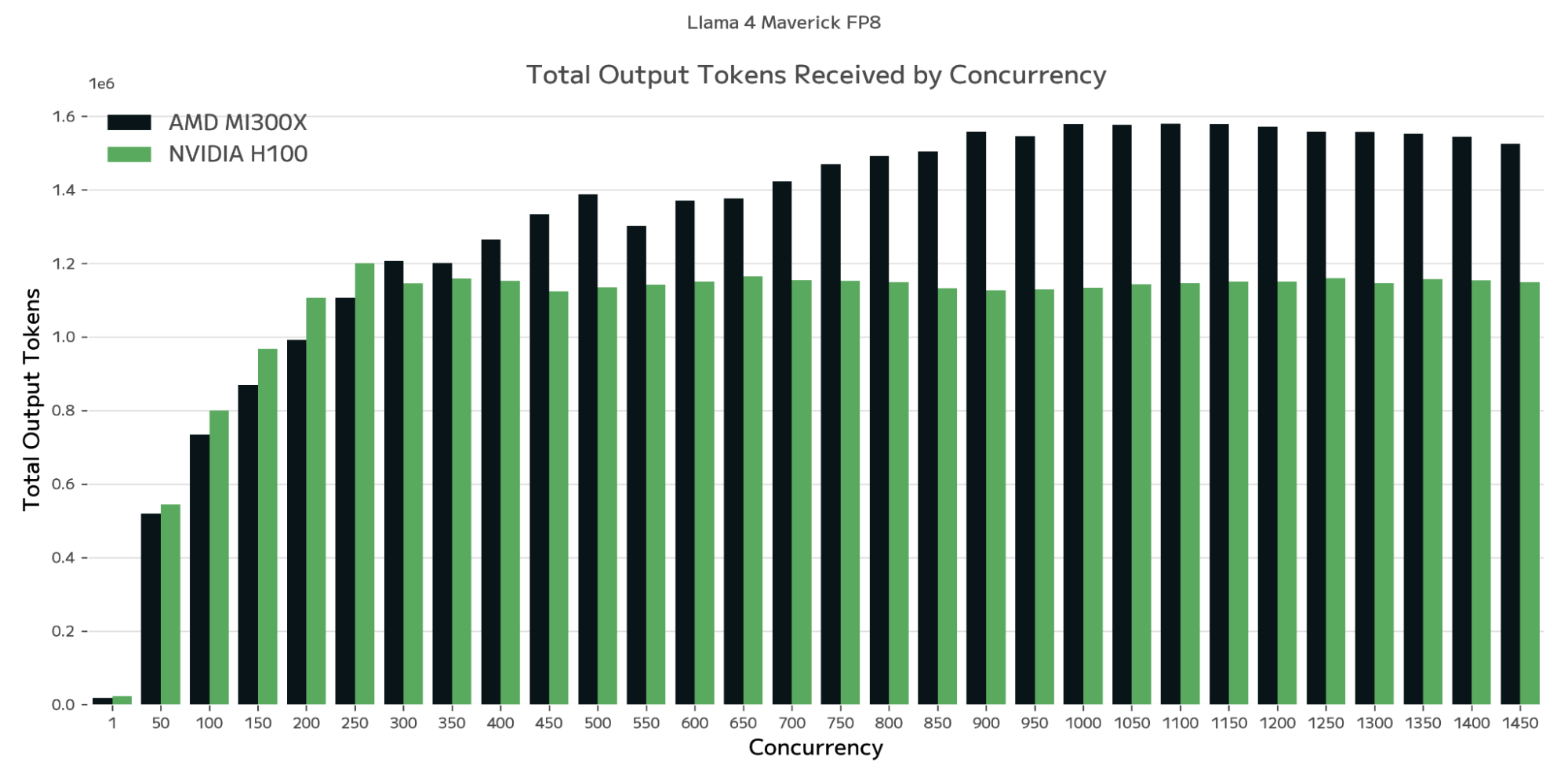

- The AMD MI300X system achieved 25-35% higher peak system output throughput compared to NVIDIA systems.

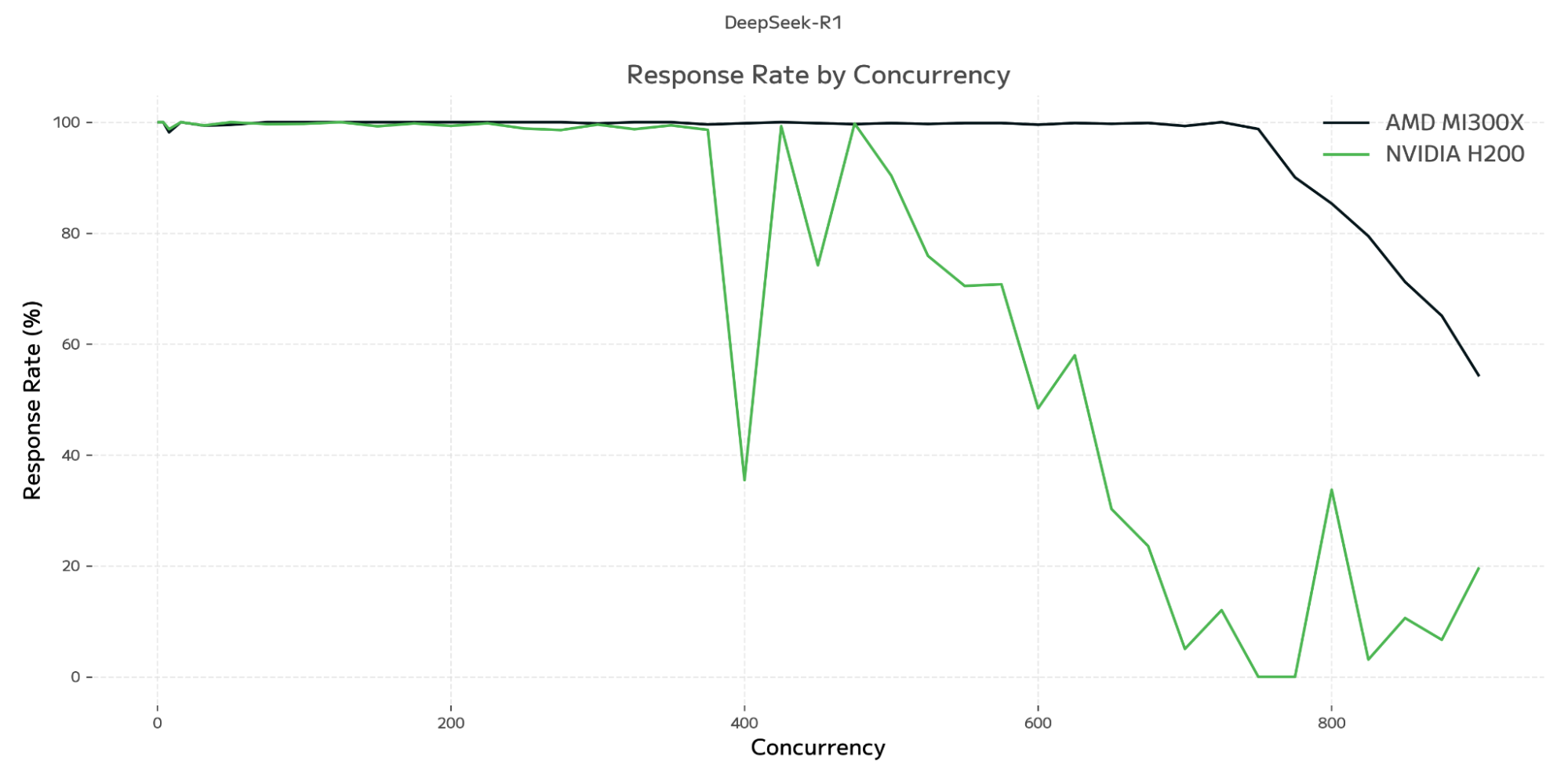

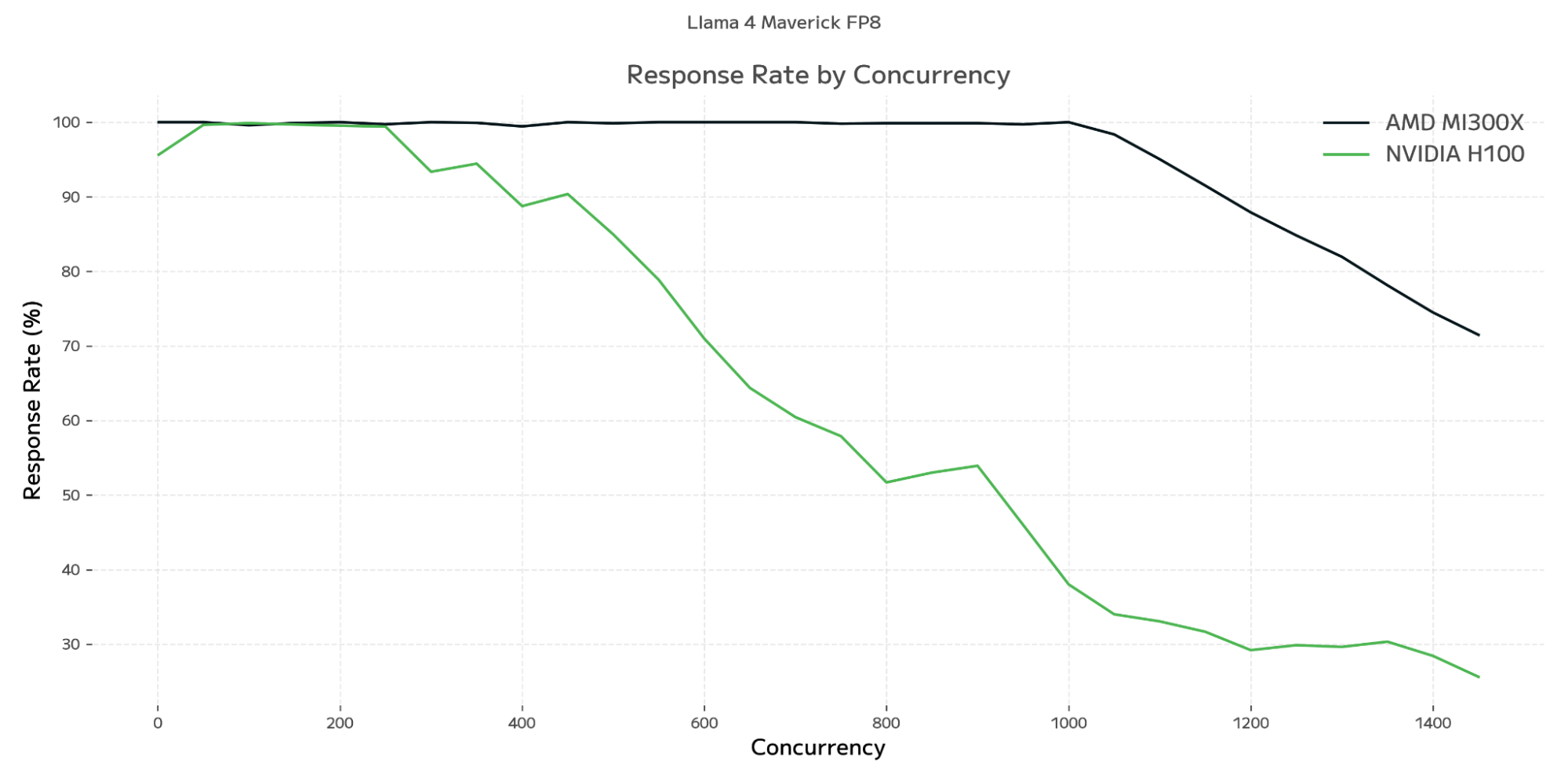

- The AMD MI300X system sustained near-perfect response rates (i.e., the proportion of queries sent for which we received >0 output tokens within the 3-minute test window) all the way to its measured peak throughput, while the NVIDIA H200 system saw response rates degrade as it scaled to peak system output throughput.

- DeepSeek R1: The AMD MI300X system achieved a peak system output throughput of ~4,100 output tokens/s at ~750 concurrent queries, while the NVIDIA H200 system achieved a peak system output throughput of ~3,250 output tokens/s at ~475 concurrent queries.

- Llama 4 Maverick: The AMD MI300X system achieved a peak system output throughput of ~8,500 output tokens/s at 1,000 concurrent queries, while the NVIDIA H100 system achieved a peak system output throughput of ~6,500 output tokens/s at 250 concurrent queries.

- As such, the AMD MI300X system offers advantages for use cases that optimize for efficiency. These can include batch workloads that process large-scale data and workloads that optimize for cost. The higher system output throughput observed on the AMD MI300X translates directly to a lower implied cost per token.
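The 25-35% range quoted above can be checked against the approximate peak figures listed in this section. The short sketch below does only that arithmetic; the inputs are the rounded values from the text, so the resulting percentages are approximate as well.

```python
def percent_uplift(value: float, baseline: float) -> float:
    """Percentage by which `value` exceeds `baseline`."""
    return (value / baseline - 1.0) * 100.0


# Approximate peak system output throughput (output tokens/s) from the text:
# (AMD MI300X, NVIDIA system used for that model).
peak_throughput = {
    "DeepSeek R1": (4100, 3250),       # MI300X vs. H200
    "Llama 4 Maverick": (8500, 6500),  # MI300X vs. H100
}

uplifts = {
    model: percent_uplift(amd, nvidia)
    for model, (amd, nvidia) in peak_throughput.items()
}

for model, uplift in uplifts.items():
    print(f"{model}: MI300X peak throughput is ~{uplift:.0f}% higher")
```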

Accuracy:

- Our accuracy benchmarking methodology involves running evaluations like-for-like across systems.

- We compared results for GPQA 64X, MMLU-Pro 16X and MATH-500 16X across AMD and NVIDIA systems. We did not find any statistically significant difference in accuracy, assessed to our standard 95% confidence interval.

- We repeat each evaluation multiple times to control for run-to-run variance, which arises because LLMs respond differently to the same questions across runs. Single evaluation runs are insufficient for comparing accuracy between systems, as results can vary by >1ppt between like-for-like runs.
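The exact statistical procedure is not described in this article. As one possible illustration of comparing repeated runs at a 95% confidence level, the sketch below builds a normal-approximation confidence interval for the difference in mean accuracy between two systems and checks whether it contains zero. The scores are hypothetical placeholders, not results from this analysis, and with only a few repeats a t-based interval would be more conservative.

```python
import math
import statistics
from typing import Sequence, Tuple


def diff_ci95(scores_a: Sequence[float], scores_b: Sequence[float]) -> Tuple[float, float]:
    """95% CI for mean(a) - mean(b), using a normal approximation (assumption)."""
    mean_diff = statistics.fmean(scores_a) - statistics.fmean(scores_b)
    # Standard error of the difference between two independent sample means.
    std_err = math.sqrt(
        statistics.variance(scores_a) / len(scores_a)
        + statistics.variance(scores_b) / len(scores_b)
    )
    half_width = 1.96 * std_err
    return mean_diff - half_width, mean_diff + half_width


def significantly_different(scores_a: Sequence[float], scores_b: Sequence[float]) -> bool:
    """True when the 95% CI for the difference excludes zero."""
    lower, upper = diff_ci95(scores_a, scores_b)
    return not (lower <= 0.0 <= upper)


# Hypothetical accuracy scores (%) from repeated runs of one evaluation.
system_a_runs = [71.2, 70.1, 72.0, 70.8]
system_b_runs = [70.9, 71.5, 70.3, 71.1]

print(diff_ci95(system_a_runs, system_b_runs))
print(significantly_different(system_a_runs, system_b_runs))
```

A check of this kind makes the point of the last bullet concrete: individual runs in the hypothetical data differ by more than 1ppt, yet the interval for the difference in means still spans zero.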

DeepSeek R1 (Jan 2025)

DeepSeek R1: End-to-End Latency vs. Concurrency

DeepSeek R1: Output Speed vs. Concurrency

DeepSeek R1: Response Rate vs. Concurrency

DeepSeek R1: Throughput vs. Concurrency

Llama 4 Maverick

Llama 4 Maverick: End-to-End Latency vs. Concurrency

Llama 4 Maverick: Output Speed vs. Concurrency

Llama 4 Maverick: Response Rate vs. Concurrency

Llama 4 Maverick: Throughput vs. Concurrency

Benchmarking Methodology

The Artificial Analysis System Load Test employs a concurrency-based benchmarking approach.

The benchmark measures system performance by keeping a fixed number of parallel queries in flight during each testing phase. As soon as an individual query finishes, another query is immediately sent to the machine. This ensures the system is benchmarked at a stable load while also minimizing test variance.

The aggregate number of tokens received from the system during each phase is measured and feeds our system output throughput metric. Per-query performance is also measured and feeds our per-query metrics (usually represented as a median or mean).

The benchmark is conducted in phases: each phase runs for 3 minutes, and the number of concurrent queries is increased with each subsequent phase. Benchmarking continues until the system's output throughput ceiling is reached, that is, until higher concurrency yields no additional output tokens compared to prior phases.
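The fixed-concurrency idea can be sketched in a few lines of `asyncio`. This is not the AA-SLT implementation: `fake_query` is a hypothetical stand-in for a real streamed request, and the sketch simplifies by counting all tokens from queries that are still in flight when the phase ends.

```python
import asyncio
import time


async def fake_query(latency_s: float = 0.01, output_tokens: int = 10) -> int:
    """Hypothetical stand-in for one streamed request; returns tokens received."""
    await asyncio.sleep(latency_s)
    return output_tokens


async def run_phase(concurrency: int, duration_s: float) -> float:
    """Hold `concurrency` queries in flight for `duration_s`; return output tokens/s."""
    deadline = time.monotonic() + duration_s
    total_tokens = 0

    async def worker() -> None:
        nonlocal total_tokens
        # Each worker sends a new query as soon as its previous one finishes,
        # so the number of in-flight queries stays fixed at `concurrency`.
        while time.monotonic() < deadline:
            tokens = await fake_query()
            total_tokens += tokens

    await asyncio.gather(*(worker() for _ in range(concurrency)))
    return total_tokens / duration_s


if __name__ == "__main__":
    # A real phase lasts 3 minutes; a short duration keeps the demo quick.
    for level in (1, 2, 4):
        throughput = asyncio.run(run_phase(level, duration_s=0.5))
        print(f"concurrency={level}: {throughput:.0f} output tokens/s")
```

Using one long-lived worker per concurrency slot, rather than launching a fixed batch and waiting for all of it, is what keeps the load stable: a slow query never leaves other slots idle.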

Key technical specifications:

- Phase duration: 3 minutes (excludes ramp-up and cool-down periods)

- Concurrency levels: 1, 2, 4, 8, 16, 32, 64, then in increments of 50 until system output throughput plateaus

- Workload shape: 1,000 Input Tokens, 1,000 Output Tokens

- Streaming: Benchmarking is conducted with streaming on
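The concurrency schedule and stopping rule can be sketched as follows. Two points are assumptions rather than documented behavior: that the 50-step increments continue from 100 (100, 150, 200, ...), and that the sweep stops at the first phase whose throughput does not exceed the best seen so far. The `measure` callback is a hypothetical hook that would run one benchmarking phase.

```python
from typing import Callable, Dict, Iterator


def concurrency_levels() -> Iterator[int]:
    """Yield 1, 2, 4, ..., 64, then steps of 50 (assumed to continue from 100)."""
    yield from (1, 2, 4, 8, 16, 32, 64)
    level = 100  # assumption: the text only says "increments of 50"
    while True:
        yield level
        level += 50


def sweep(measure: Callable[[int], float], max_level: int = 2000) -> Dict[int, float]:
    """Run phases at rising concurrency until throughput stops improving."""
    results: Dict[int, float] = {}
    best = 0.0
    for level in concurrency_levels():
        if level > max_level:
            break
        throughput = measure(level)
        results[level] = throughput
        if throughput <= best:
            break  # no additional tokens versus prior phases: ceiling reached
        best = throughput
    return results


def toy_system(level: int) -> float:
    """Toy model: throughput grows linearly, then saturates at 3,000 tokens/s."""
    return float(min(level * 10, 3000))


results = sweep(toy_system)
print(f"Peak {max(results.values()):.0f} tokens/s; last level tested: {max(results)}")
```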

Key metrics measured:

- System Output Throughput: Average aggregate output tokens per second across all concurrent requests over the benchmarking phase

- Response rate: Proportion of queries sent during the benchmarking phase that received responses (at least 1 output token)

- End-to-End Latency: End-to-end response time for each query, measured from the time the query is sent

- Output Speed: Output tokens per second after first token received for each query
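A minimal sketch of how these four metrics could be derived from per-query records is shown below. The `QueryRecord` fields are hypothetical names chosen for illustration, and the output speed formula assumes the first token is excluded from the count, since the definition measures speed after the first token is received.

```python
import statistics
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class QueryRecord:
    """Hypothetical per-query log entry; times are seconds from phase start."""
    sent_at: float
    first_token_at: Optional[float]  # None if no token was ever received
    finished_at: Optional[float]     # None if the query never completed
    output_tokens: int


def system_output_throughput(records: List[QueryRecord], phase_s: float) -> float:
    """Aggregate output tokens per second across all queries in the phase."""
    return sum(r.output_tokens for r in records) / phase_s


def response_rate(records: List[QueryRecord]) -> float:
    """Share of queries sent that received at least 1 output token."""
    return sum(r.output_tokens >= 1 for r in records) / len(records)


def end_to_end_latencies(records: List[QueryRecord]) -> List[float]:
    """Seconds from query sent to completion, for completed queries only."""
    return [r.finished_at - r.sent_at for r in records if r.finished_at is not None]


def output_speeds(records: List[QueryRecord]) -> List[float]:
    """Output tokens/s after the first token (first token excluded: assumption)."""
    speeds = []
    for r in records:
        if r.first_token_at is None or r.finished_at is None:
            continue
        elapsed = r.finished_at - r.first_token_at
        if elapsed > 0:
            speeds.append((r.output_tokens - 1) / elapsed)
    return speeds


# Hypothetical records for a 180-second phase (illustration only).
records = [
    QueryRecord(sent_at=0.0, first_token_at=1.0, finished_at=11.0, output_tokens=1000),
    QueryRecord(sent_at=0.0, first_token_at=2.0, finished_at=22.0, output_tokens=1000),
    QueryRecord(sent_at=0.0, first_token_at=None, finished_at=None, output_tokens=0),
]

print("throughput:", system_output_throughput(records, phase_s=180.0))
print("response rate:", response_rate(records))
print("median latency:", statistics.median(end_to_end_latencies(records)))
print("median output speed:", statistics.median(output_speeds(records)))
```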

Benchmarking Specifications

DeepSeek R1 (Jan 2025 version)

| Attribute | AMD MI300X | NVIDIA H200 |
|---|---|---|
| Inference Engine | SGLang | SGLang ≥ 0.4.6.post4 |
| Model Quantization | FP8 | FP8 |
| Operating System | Ubuntu 22.04.5 LTS | Ubuntu 22.04.5 LTS |
| Model Framework | PyTorch 2.6.0 | PyTorch 2.8.0 |
| GPU Acceleration Framework | ROCM-SMI 3.0.0+03a4530 | CUDA 12.8.1 |
| Inference Engine Arguments | `--tp 8 --trust-remote-code --chunked-prefill-size 131072 --disable-radix-cache` | `--tp 8 --trust-remote-code` |

Llama 4 Maverick

| Attribute | AMD MI300X | NVIDIA H100 |
|---|---|---|
| Inference Engine | vLLM 0.8.5.dev679+gd066e5201 | vLLM 0.8.5.post1 |
| Model Quantization | FP8 | FP8 |
| Operating System | Ubuntu 22.04.5 LTS | Ubuntu 22.04.5 LTS |
| Model Framework | PyTorch 2.7.0 | PyTorch 2.8.0 |
| GPU Acceleration Framework | ROCM-SMI 3.0.0+03a4530 | CUDA 12.8.1 |
| Inference Configurations | `--tp 8 --no-enable-prefix-caching --max_num_batched_tokens 16384 --max_num_seqs 1024` | `--tensor-parallel-size 8 --max-model-len 390000` |